In our previous article, we discussed Fully Qualified Domain Name (FQDN) egress filtering in GCP using FQDN objects in firewall policy rules. Those rules are applied to the VPC network, so egress filtering is enforced for every workload in that network.

The Google Kubernetes Engine (GKE) team recently announced support for FQDN egress filtering in GKE Dataplane V2. The feature lets you control egress traffic from all Pods, or a subset of them, to resources outside the GKE cluster by specifying FQDNs instead of IP addresses.

This post shows how to use the new FQDN network policy to control egress traffic from Pods to resources outside the GKE cluster.

The FQDN network policy is a Google-specific implementation for GKE rather than part of the upstream Kubernetes NetworkPolicy API. Google has not said how the implementation will change if and when the Kubernetes project publishes a standard for FQDN-based policies.

Requirements and limitations

- FQDN network policy is currently available in Preview only, and only for GKE Standard clusters that use GKE Dataplane V2.

- GCP provides no SLAs or technical support commitments during the preview period.

- FQDN network policy is a paid feature, but no payment is required during the Preview period. GCP has not yet published a pricing model, so we need to wait for the GA announcement.

- The GKE cluster version must be 1.26.4-gke.500 or 1.27.1-gke.400 and later.

- The cluster must use kube-dns or Cloud DNS as one of the DNS providers.

- Windows node pools and Anthos Service Mesh are not supported.

- An FQDNNetworkPolicy cannot allow traffic to a ClusterIP or headless Service as an egress destination, because GKE translates the Service virtual IP address (VIP) to backend Pod IP addresses before it evaluates Network Policy rules.

- CNAME records cannot be used to program IP addresses into the GKE policy enforcement module. Instead, put the names that hold the A/AAAA records the CNAME points to directly in the policy.
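The version requirement can be checked before you enable the feature. Below is a minimal sketch that assumes GNU `sort -V` and reads the requirement as "1.26.4-gke.500 or later on the 1.26 minor, 1.27.1-gke.400 or later on 1.27, and any newer minor"; that reading is our interpretation, and `version_ge` and `fqdn_policy_version_ok` are hypothetical helpers, not part of gcloud.

```shell
# Hypothetical helpers: compare a GKE version string with the minimum
# versions listed for FQDN network policy (assumes GNU sort -V).

# Succeeds if version $1 is greater than or equal to version $2.
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Succeeds if GKE version $1 (for example 1.26.5-gke.1200) meets the minimum.
# Assumption: minors newer than 1.27 qualify, minors older than 1.26 do not.
fqdn_policy_version_ok() {
  minor="${1#*.}"      # 1.26.5-gke.1200 -> 26.5-gke.1200
  minor="${minor%%.*}" # 26.5-gke.1200   -> 26
  case "$minor" in
    26) version_ge "$1" "1.26.4-gke.500" ;;
    27) version_ge "$1" "1.27.1-gke.400" ;;
    *) [ "$minor" -gt 27 ] ;;
  esac
}
```

For example, `fqdn_policy_version_ok 1.26.5-gke.1200` succeeds for the version used in the cluster below. For an existing cluster, you could pass the output of `gcloud container clusters describe CLUSTER --region us-central1 --format='value(currentMasterVersion)'`.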

Please refer to the official documentation for all current limitations.
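For the CNAME limitation, one way to find the name that actually carries the A/AAAA records is to follow the chain printed by `dig +short`, which lists CNAME targets (names ending in a dot) before the addresses. The sketch below assumes that output format; `cname_target` is a hypothetical helper that reads `dig +short` output on stdin and prints the last name in the chain, or the queried name itself when there is no CNAME.

```shell
# Hypothetical helper: print the hostname that holds the A/AAAA records for
# the domain in $1, given "dig +short $1" output on stdin.
cname_target() {
  last="$1"
  # Also handle a final line without a trailing newline
  while IFS= read -r line || [ -n "$line" ]; do
    case "$line" in
      *.) last="${line%.}" ;; # CNAME target: remember it, minus the trailing dot
    esac
  done
  printf '%s\n' "$last"
}
```

Usage: `dig +short www.doit.com | cname_target www.doit.com`. If the output differs from the domain you queried, the printed name is the one to use in the policy.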

Set up a GKE cluster

Create a new GKE Standard cluster with GKE Dataplane V2 and FQDN network policy enabled.

gcloud beta container clusters create fqdn-network-policy-demo-cluster \
  --region us-central1 \
  --enable-fqdn-network-policy \
  --cluster-version=1.26.5-gke.1200 \
  --enable-dataplane-v2

GKE Dataplane V2 is implemented using Cilium, and Kubernetes NetworkPolicy is always on in clusters with GKE Dataplane V2. You don’t have to install and manage third-party software add-ons such as Calico to enforce network policy.
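Once the cluster is running, you can fetch credentials and confirm that the FQDNNetworkPolicy API is available before deploying anything. The sketch below wraps those checks in a hypothetical `check_fqdn_api` function. It assumes the CRD is registered as `fqdnnetworkpolicies.networking.gke.io`, the conventional plural name for the `FQDNNetworkPolicy` kind in the `networking.gke.io` group used later in this post.

```shell
# Hypothetical helper: fetch kubeconfig credentials for the demo cluster and
# check that the FQDNNetworkPolicy CRD is installed.
# Assumption: the CRD name is fqdnnetworkpolicies.networking.gke.io.
check_fqdn_api() {
  cluster="${1:-fqdn-network-policy-demo-cluster}"
  region="${2:-us-central1}"
  gcloud container clusters get-credentials "$cluster" --region "$region" || return 1
  if kubectl get crd fqdnnetworkpolicies.networking.gke.io >/dev/null 2>&1; then
    echo "FQDNNetworkPolicy API is available"
  else
    echo "FQDNNetworkPolicy API not found" >&2
    return 1
  fi
}
```

Running `check_fqdn_api` with no arguments uses the cluster name and region from the create command above.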

Deploy a sample application

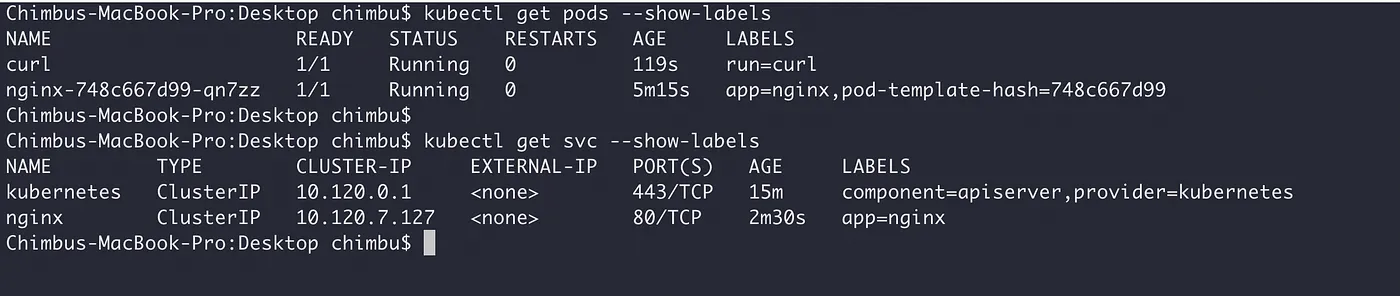

Deploy a sample nginx application and a curl test Pod in the default namespace.

# Create the nginx Deployment (its Pods get the label app=nginx)
kubectl create deployment nginx --image nginx
# Expose the nginx Deployment as a ClusterIP Service on port 80
kubectl expose deployment nginx --port 80 --target-port 80
# Create the curl test Pod (kubectl run labels it run=curl)
kubectl run curl --image curlimages/curl --command -- sleep 3600

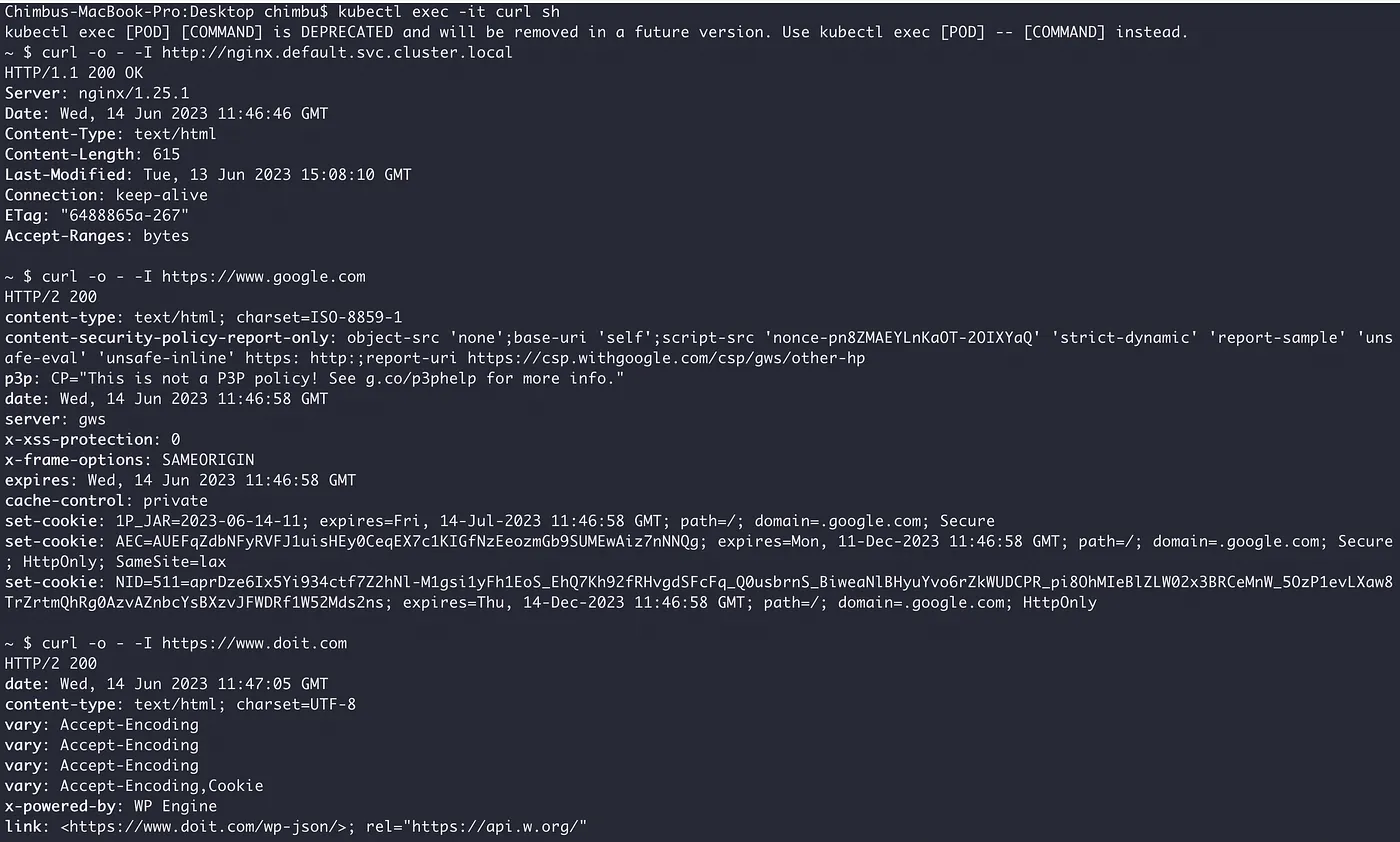

Test the internal and internet endpoints from the curl pod.

The results show that, with no policy in place, the curl Pod can reach both the internal nginx ClusterIP Service and internet endpoints.
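To reproduce these checks from a terminal, you can run curl inside the Pod with `kubectl exec`. This is one possible way to do it, not necessarily the exact commands behind the screenshots: the hypothetical `probe` function assumes the Pod is named `curl`, uses a 5-second timeout, and treats curl's `000` status code (no response received) as blocked.

```shell
# Hypothetical helper: request a URL from inside the "curl" Pod and report
# whether a response came back. Assumes kubectl points at the demo cluster.
probe() {
  url="$1"
  # -s: silent, -o /dev/null: discard the body, -m 5: give up after 5 seconds,
  # -w: print only the HTTP status code (000 if no response was received).
  code=$(kubectl exec curl -- curl -s -o /dev/null -m 5 -w '%{http_code}' "$url") || true
  [ -n "$code" ] || code=000
  if [ "$code" = "000" ]; then
    echo "$url BLOCKED"
  else
    echo "$url ALLOWED ($code)"
  fi
}
```

For example, run `probe http://nginx`, `probe https://www.doit.com` and `probe https://www.google.com`, and repeat the same calls after applying each policy.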

Set up an FQDN network policy

FQDN egress filtering is configured through FQDNNetworkPolicy objects, a custom resource (CRD) provided by GKE.

An active FQDNNetworkPolicy does not block DNS requests from the workloads it selects: commands such as nslookup or dig resolve any domain as usual. However, subsequent connections to the IP addresses behind domains that are not in the allowlist are dropped.

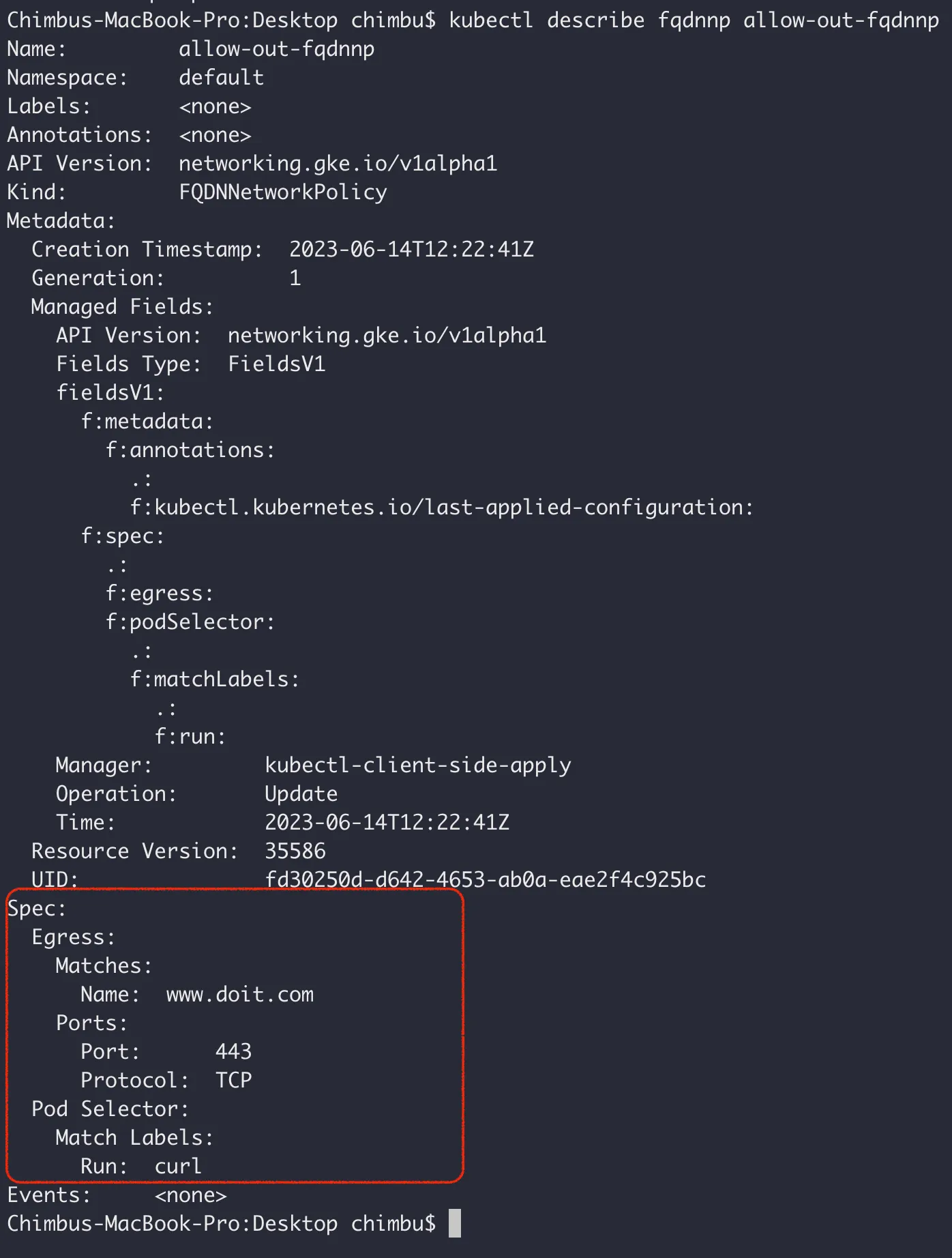

Deploy the following sample policy for the curl Pod. It allows egress requests only to the domain www.doit.com, on TCP port 443.

cat <<EOF | kubectl apply -f -
---
apiVersion: networking.gke.io/v1alpha1
kind: FQDNNetworkPolicy
metadata:
  name: allow-out-fqdnnp
spec:
  podSelector:
    matchLabels:
      run: curl # label assigned to the curl Pod
  egress:
  - matches:
    # Fully qualified domain name: IP addresses returned by the nameserver for
    # www.doit.com are allowed. You must specify either name or pattern, or both.
    - name: "www.doit.com"
    ports:
    # Optional: allow only HTTPS traffic (TCP 443)
    - protocol: "TCP"
      port: 443
EOF
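To allow several domains, you can list more entries under `matches`. The sketch below is a hypothetical `render_fqdn_policy` helper that prints an FQDNNetworkPolicy with the same structure as the manifest above for any number of domain names, all restricted to TCP 443. It only covers the `name` field; the policy name, label, and extra domain in the usage example are assumptions.

```shell
# Hypothetical helper: print an FQDNNetworkPolicy manifest that lets Pods
# labelled run=<label> reach each listed domain on TCP 443.
# Usage: render_fqdn_policy <policy-name> <run-label> <domain>...
render_fqdn_policy() {
  name="$1"
  label="$2"
  shift 2
  # Fixed header: metadata, Pod selector and the start of the egress rule
  cat <<EOF
apiVersion: networking.gke.io/v1alpha1
kind: FQDNNetworkPolicy
metadata:
  name: $name
spec:
  podSelector:
    matchLabels:
      run: $label
  egress:
  - matches:
EOF
  # One match entry per domain
  for domain in "$@"; do
    printf '    - name: "%s"\n' "$domain"
  done
  # Restrict the rule to HTTPS, as in the manifest above
  cat <<'EOF'
    ports:
    - protocol: "TCP"
      port: 443
EOF
}
```

Usage: `render_fqdn_policy allow-out-fqdnnp curl www.doit.com www.google.com | kubectl apply -f -`. Generating the list keeps every domain inside a single egress rule, so they all share the same port restriction.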

Verify that the network policy is applied to the correct workload.

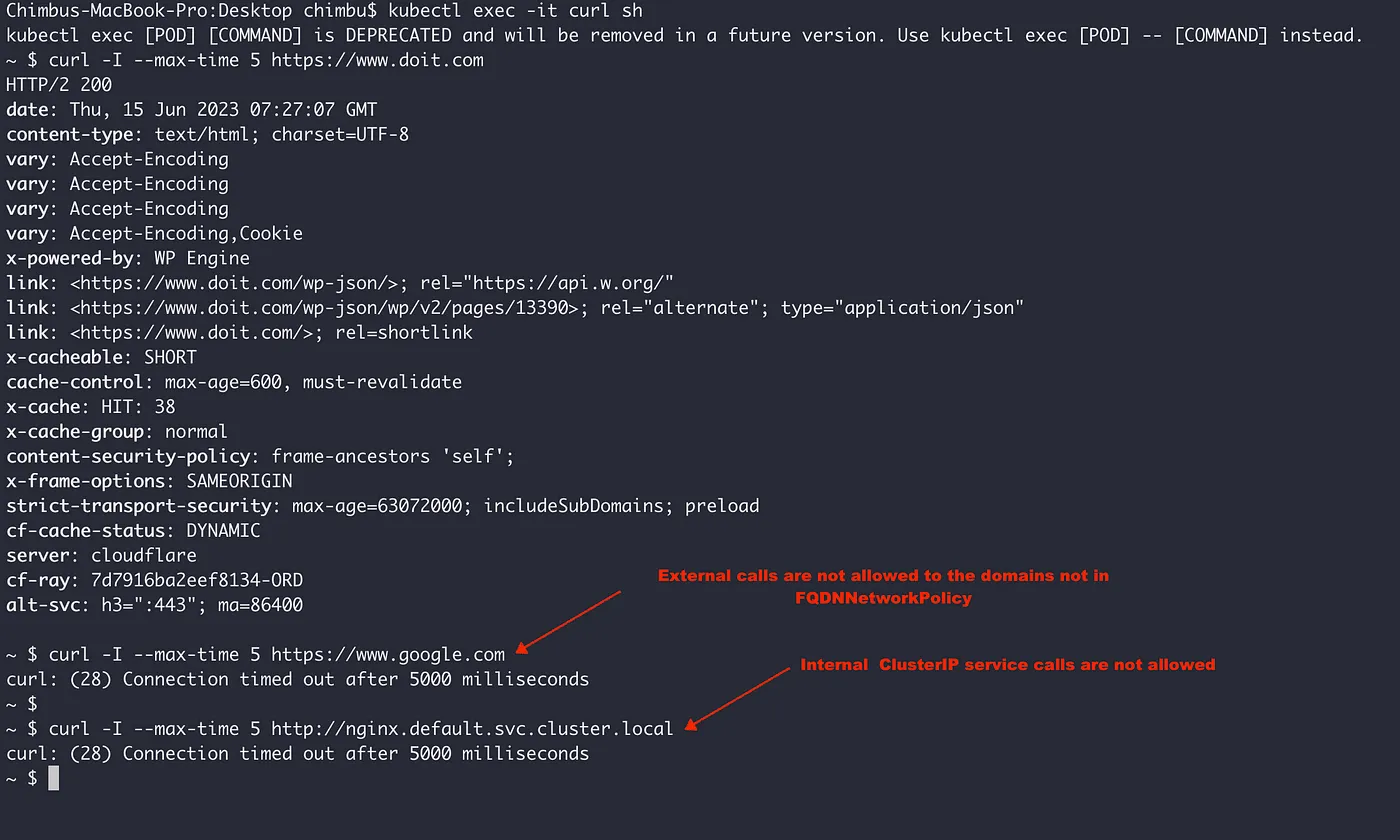

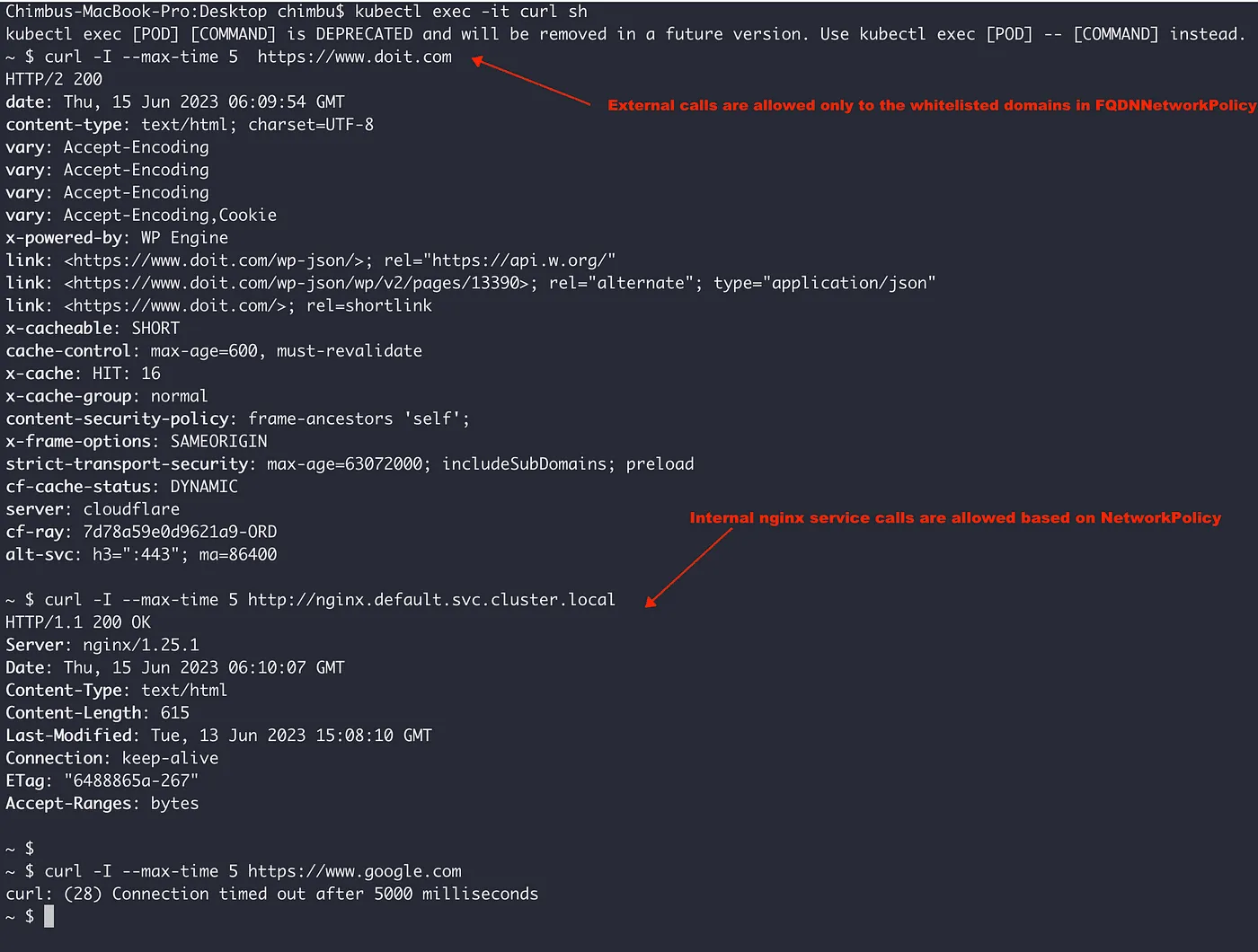
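The same check can be approximated from the command line. A minimal sketch, assuming kubectl accepts the lowercase kind `fqdnnetworkpolicy` as the resource name for this CRD: the hypothetical `verify_policy_targets` helper describes the policy and lists the Pods that its `run=curl` selector matches.

```shell
# Hypothetical helper: show the FQDNNetworkPolicy and the Pods it selects.
# Assumption: the policy selects Pods with the label run=curl, as above.
verify_policy_targets() {
  policy="${1:-allow-out-fqdnnp}"
  selector="${2:-run=curl}"
  kubectl describe fqdnnetworkpolicy "$policy" || return 1
  echo "Pods selected by $selector:"
  kubectl get pods -l "$selector" -o name
}
```

If the selector is correct, the list contains `pod/curl`.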

Test the internal and external endpoints from the curl pod.

The results show that requests are allowed only to https://www.doit.com; all other destinations are blocked, including the nginx ClusterIP Service. To allow egress requests to a ClusterIP Service or a Pod IP address, you also need a label-based Kubernetes NetworkPolicy.

When both an FQDNNetworkPolicy and a NetworkPolicy apply to the same Pod, egress traffic is allowed if it matches either policy. There is no precedence between IP address or label-based egress policies and FQDN network policies.

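Deploy the following label-based Kubernetes NetworkPolicy to allow egress requests from the curl Pod to the nginx ClusterIP Service. Its second rule allows DNS on port 53 so the Pod can still resolve names such as the nginx Service once an egress NetworkPolicy selects it.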

cat <<EOF | kubectl apply -f -
---
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-internal-service-calls
spec:
  podSelector:
    matchLabels:
      run: curl # source Pod label
  policyTypes:
  - Egress
  egress:
  # Allow HTTP to the nginx Pods behind the ClusterIP Service
  - to:
    - podSelector:
        matchLabels:
          app: nginx # target Pod label
    ports:
    - protocol: TCP
      port: 80
  # Allow DNS (TCP and UDP port 53) to any destination
  - to:
    ports:
    - protocol: TCP
      port: 53
    - protocol: UDP
      port: 53
EOF
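With both policies applied, the curl Pod's egress is the union of their allow rules. The toy model below only restates the two policies in this post as a shell function so that the expected outcome of each test is explicit; it is not how GKE Dataplane V2 evaluates rules. `egress_allowed` and the `pod:app=nginx` destination notation are hypothetical.

```shell
# Toy model (not Dataplane V2 logic): succeed if either policy above allows
# egress from the curl Pod to <destination> on <protocol>/<port>.
# Usage: egress_allowed <destination> <protocol> <port>
egress_allowed() {
  dest="$1"; proto="$2"; port="$3"
  # FQDNNetworkPolicy allow-out-fqdnnp: www.doit.com on TCP 443
  if [ "$dest" = "www.doit.com" ] && [ "$proto" = "TCP" ] && [ "$port" = "443" ]; then
    return 0
  fi
  # NetworkPolicy allow-internal-service-calls, rule 1: nginx Pods on TCP 80
  if [ "$dest" = "pod:app=nginx" ] && [ "$proto" = "TCP" ] && [ "$port" = "80" ]; then
    return 0
  fi
  # NetworkPolicy allow-internal-service-calls, rule 2: DNS on port 53
  # (the policy lists both TCP and UDP, so the protocol is not checked)
  if [ "$port" = "53" ]; then
    return 0
  fi
  # No policy matched: the traffic is dropped
  return 1
}
```

For example, `egress_allowed pod:app=nginx TCP 80` succeeds, because the ClusterIP Service is translated to the nginx Pod IPs before policy evaluation, while `egress_allowed www.google.com TCP 443` fails.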

Test the internal and external connectivity from the curl pod.

These results show that combining an FQDNNetworkPolicy with a label-based NetworkPolicy gives fine-grained control over Pod egress: in-cluster Services are allowed by label, and external destinations by domain name.

Conclusion

FQDN egress filtering in GKE Dataplane V2 lets administrators restrict a Pod's outbound traffic to an explicit list of domain names, which strengthens network security and governance in Kubernetes environments.

Preview features are intended for test environments only. Follow the GKE release notes for the GA announcement.