BLUF

There’s a convention within the Kubernetes Community: things built into Kubernetes reference kubernetes.io in their naming, while Kubernetes Add-ons don’t. Add-ons tend to follow a self-documenting convention like ebs.csi.aws.com, where you can intuitively tell it’s an add-on. The aws-load-balancer-controller add-on breaks this convention.

Target Audience

This article has three intended audiences/purposes:

1. Kubernetes Admins running EKS or Generic Kubernetes Distros on AWS: you don’t need to read this now, but I’d recommend bookmarking it and making a mental note that it exists as reference material to revisit if you ever need to debug AWS Load Balancer provisioning driven by Kubernetes Services with annotations like service.beta.kubernetes.io/aws-load-balancer-*.

2. Kubernetes Nerds who like deep-dive articles that help them better understand Kubernetes.

3. Maintainers of EKS, aws-cloud-controller-manager, aws-load-balancer-controller, and AWS Docs: It’s my intent for this article to shine a light on a confusing inconsistency that is unique to Kubernetes Load Balancer Provisioning on AWS. I’m hoping that it will encourage change toward consistency with the rest of the Kubernetes Community. (The issue crosses several git repos and documentation sites, so it’s not easily solved by a few merge requests.)

Introduction

I recently started on a proof of concept that involved provisioning AWS Load Balancers for an EKS (Elastic Kubernetes Service) Cluster using Kubernetes Services of type LoadBalancer with annotations of the form service.beta.kubernetes.io/aws-load-balancer-*, which are listed on this page.

Initially, some annotations didn’t work as expected. I was able to troubleshoot them, but only after I understood why they weren’t working. The docs on the linked page do a decent job of explaining how things work, but the explanations aren’t very beginner-friendly.

So this post will act as a deep dive explanation of several topics:

- Why the aws-load-balancer-controller is confusing

- Background contextual information that will help get beginners up to speed

- Details about why things work the way they work

- Some basic but useful troubleshooting tips

- Ideas about how various maintainer groups could make this less confusing in the future

Challenge: A case of Mistaken Identity

I mentioned that I don’t find the above linked page to be very beginner-friendly. To clarify, I found the project confusing enough that it took me a day to wrap my head around it. That’s a bad sign, since I’ve been a Kubernetes SME since July 2018. After figuring it out, my first thought was “Oh, that’s what’s going on. TIL (Today I learned) there are two different AWS-specific Kubernetes Controllers that can use annotations attached to Kubernetes Services of type LoadBalancer to provision and manage AWS LBs.” My second thought was “Wow… How are other people supposed to figure this out without an expert? I should write an article about this.”

Here’s a list of items that make the AWS Load Balancer Controller Project confusing:

1.) It looks like official baked-in functionality, but it’s not!

When I did a Google search for “aws service of type lb”:

The first result: docs.aws.amazon.com/eks/latest/userguide/network-load-balancing.html

The second result: kubernetes-sigs.github.io/aws-load-balancer-controller/v2.4/guide/service/annotations/

And the relevant section of the official Kubernetes Docs, which is the first result when you search “kubernetes service of type loadbalancer”

https://kubernetes.io/docs/concepts/services-networking/service/

All three of these pages look like official documentation, and they all look like they’re talking about the same topic because the same annotations show up on all three pages.

If you Ctrl+F all three pages for:

service.beta.kubernetes.io/aws-load-balancer-additional-resource-tags

The same annotation shows up on all three pages, which makes you think at first glance that all three sites are talking about the same thing.

In reality, the aws-load-balancer-controller is a Kubernetes Add-on that extends the built-in functionality. So two of the above links cover built-in functionality, and the third covers an add-on intended to offer extended functionality.

2.) The naming convention used by the project’s website and annotations is really unintuitive. It tricks you into thinking the annotations reference baked-in functionality:

2A.) The DNS name where the project’s docs are hosted is kubernetes-sigs.github.io, which makes it look like it could be built-in Kubernetes functionality.

2B.) The add-on’s annotations reference service.beta.kubernetes.io, which is usually reserved for baked-in functionality.

3.) The home page of the project’s documentation site doesn’t make it immediately obvious that this is a Kubernetes Add-On intended to extend built-in functionality.

It’s not until you get near the bottom of the second page of the docs

https://kubernetes-sigs.github.io/aws-load-balancer-controller/v2.4/deploy/installation/#add-controller-to-cluster

and spot helm install aws-load-balancer-controller that your brain goes, “Ooooh! This is an add-on, ok, that makes sense.”

4.) The pattern the API uses is inconsistent with the normal pattern used by the larger Kubernetes Community:

I’ll use Cert Manager and EBS Volume CSI (Container Storage Interface) Driver as two examples of normal patterns:

- In the case of cert-manager’s docs, 95% of their annotations use cert-manager.io. This makes it intuitively obvious at a glance that the annotations refer to a third-party add-on.

- The first sentence of cert-manager’s docs landing page is “cert-manager adds certificates and certificate issuers as resource types in Kubernetes clusters…”, which makes it clear up front that the project is an extension that adds functionality.

- In the case of EBS Volume CSI Driver, a naming convention makes it clear at a glance that it is different from the built-in functionality.

The baked-in AWS Storage Class uses provisioner: kubernetes.io/aws-ebs

The EBS Add-on Storage Class uses provisioner: ebs.csi.aws.com

- It’s very common for the landing pages of multiple EBS CSI documentation sites to make it clear up front that it’s an add-on that’s not installed by default. The project’s docs even purposefully include the keyword “driver” to make this more intuitive: “The Amazon EBS CSI driver isn’t installed when you first create a cluster. To use the driver, you must add it as an Amazon EKS add-on or as a self-managed add-on.” And in case people land on the GitHub page rather than the docs, even the project’s GitHub landing page has a link to “Driver Installation”.

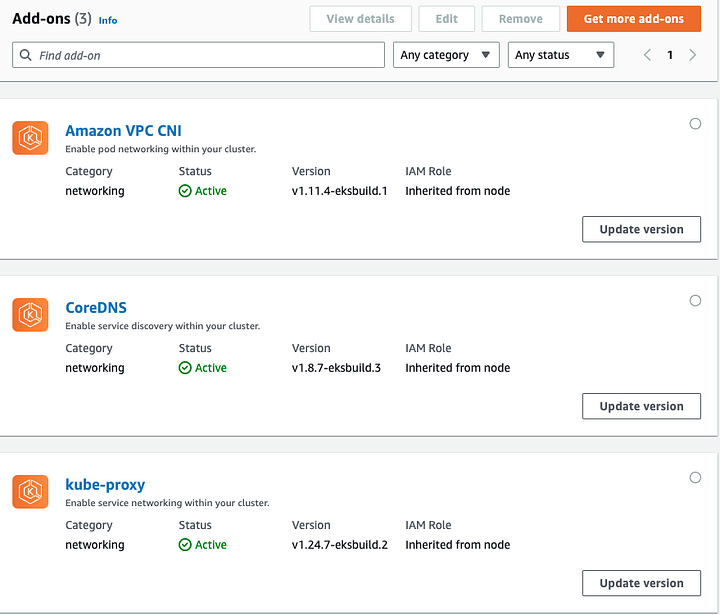

5.) AWS’s UI and docs are also inconsistent when it comes to the aws-load-balancer-controller:

AWS Load Balancer Controller has a user guide in docs.aws.amazon.com titled “Installing the AWS Load Balancer Controller add-on”

But if you look in the GUI, it doesn’t show up as an official add-on, not even when you click “Get more add-ons” and see Amazon EBS CSI Driver and AWS Distro for OpenTelemetry.

6.) The project lacks background contextual information

The service annotation page of the docs briefly mentions two topics that are both useful to understand (as understanding aids troubleshooting) and nearly impossible for a newcomer to wrap their head around without additional explanation. If you try to Google the topics, the information you’ll find is vague, fragmented, and seemingly contradictory unless you’re able to pick up on lots of nuances.

The two topics I’m referring to that are briefly mentioned without explanation in the docs are:

1. “in-tree”: “the k8s in-tree kube-controller-manager”

2. “Legacy AWS Cloud Provider”: “The AWS Load Balancer Controller manages Kubernetes Services in a compatible way with the legacy aws cloud provider.”

In an ideal world, there’d be a summarized definition of what the words mean and a link to additional reading for anyone interested. Here’s how I’d summarize them: They’re technically two different things, but they may as well be the same thing. The Legacy AWS Cloud Provider is what provides the default built-in functionality of provisioning services of type Load Balancer for Kubernetes Clusters running on AWS.

Background Contextual Information

Whenever I’m troubleshooting something, the first thing I do is try to understand it. The topic of provisioning AWS LBs requires a lot of background contextual information to fully understand. Unfortunately, before today this information was scattered, never explained in depth, and full of nuances that make it difficult to wrap your head around. This is my attempt at consolidating a few key pieces of background contextual information and making them easy to understand.

Background Context of Key Topics:

1.) kube-controller-manager vs cloud-controller-manager vs aws-cloud-controller-manager

There are nuances to what “in-tree” and “Legacy AWS Cloud Provider” refer to that are hard to understand without understanding background context around these related topics, so we’ll start here.

In the past, Kubernetes had four control plane components: The controller-manager, scheduler, api-server, and etcd. You’d run either kube-controller-manager or cloud-controller-manager, not both.

The cloud-controller-manager is the option that was used 95% of the time. It had the same functionality as the kube-controller-manager, plus baked-in support for interfacing with multiple CSP (Cloud Service Provider) APIs. Since it knew how to talk to CSP APIs it could do things like provision AWS EBS volumes and AWS Load Balancers.

The kube-controller-manager is a cloud-agnostic implementation, so it lacks the baked-in ability to do things like auto-provision CSP Storage and CSP Load Balancers.

Present-day Kubernetes now normally has five control plane components:

etcd, kube-apiserver, kube-scheduler, kube-controller-manager, and a CSP-specific controller manager like aws-cloud-controller-manager. Diagrams within the official Kubernetes docs were updated to reflect this new architecture. You can also verify this by using deployment methods like kops or kubeadm, which let you see the control plane. The main change is that you now have cloud controller managers dedicated to a single cloud service provider, intended to run alongside the kube-controller-manager, whereas the original cloud-controller-manager was more of a universal all-in-one solution.

2.) “In-tree” refers to the time when Go libraries that understood how to interface with multiple CSP APIs lived in the Kubernetes repo and were built into a universal Kubernetes Cloud Controller Manager container image:

In-tree: code that lives in the core Kubernetes repository k8s.io/kubernetes.

Out-of-Tree: code that lives in an external repository outside the k8s.io/kubernetes git repo.

Kubernetes 1.14’s code base had APIs for 8 different CSPs baked in. More wanted to be added, and the fact that they were all bundled together meant they all had to achieve stability at the same time for a release. It was an unsustainable pattern from a maintainability standpoint. To address the issue, a KEP (Kubernetes Enhancement Proposal) was approved. It proposed CSP functionality be decoupled from Kubernetes and refactored into add-ons.

This decoupling of functionality would simplify the Kubernetes release process and allow cloud providers to release features and bug fixes independently of Kubernetes’ release cycle and processes. It was a decision to pay down technical debt to ensure the long-term health of the project.

3.) If you try to research this topic on your own, it’s easy to find information that at first glance seems to conflict, until you pick up on multiple nuances. Here are a few nuances, listed in no particular order:


- The migration from in-tree to out-of-tree looks like it’s taking years. You’ll also find information suggesting that it started long ago and finished long ago, that it started and finished at different times, and that it’s still ongoing.

- Earlier I mentioned Kubernetes 1.14’s code base had APIs for eight different CSPs.

If you look at Kubernetes 1.26’s code base, you’ll still see four different CSPs in-tree. AWS still shows up in the list of in-tree CSPs. But there’s an out-of-tree git repo referencing the aws-cloud-provider that makes it sound like AWS finished their migration back in 1.20.

But the KEP suggests the migration will finish by about version 1.27?

Stuff like this is why I chose to write an article about this rather than contribute to fixing an issue with docs. Although AWS and other CSPs still exist in-tree (in a universal CSP version of cloud controller manager), that version is not what is used in practice. AWS and other CSPs use their own CSP-specific implementation, like aws-cloud-controller-manager, rather than the older in-tree universal all-in-one cloud-controller-manager.

Different CSPs have been finishing their migration from the universal in-tree to the CSP-specific out-of-tree at different rates. Another thing that’s a little confusing is that this complex effort was split into two parts. The original in-tree baked-in functionality included logic to provision CSP storage and CSP LBs. Not only was that taken out-of-tree, it was further decoupled so that CSI drivers for CSP storage became its own isolated component with its own separate migration timelines.

- When you realize the scale of complexity involved in that refactor, a few things start to make sense. First, it’s no wonder the refactor has taken over 4 years: the out-of-tree cloud provider feature achieved beta status in version 1.11, back in June 2018. Second, once you realize multiple feature freezes were put in place to support the migration process, it makes sense why bugs associated with implementing externalTrafficPolicy: Local on AWS LBs took years to fix. It also explains why there haven’t been many feature enhancements to the baked-in AWS LB provisioning logic for years.

4.) “Legacy AWS Cloud Provider” basically refers to the default load balancer provisioning functionality that you have access to in a default installation of EKS or Kubernetes on AWS.

There are a few nuances that prevent the above statement from being 100% accurate, but it’s close enough to the truth to be useful.

The nuances are as follows:

- Legacy AWS Cloud Provider can refer to the AWS Specific Logic that existed in-tree, in the (universal CSP) cloud-controller-manager, that could provision AWS EBS Volumes and AWS LBs.

- Legacy AWS Cloud Provider can also refer to the default load balancer provisioning logic that’s present in aws-cloud-controller-manager.

- aws-cloud-controller-manager is often invisible to users.

– If you use EKS, aws-cloud-controller-manager runs on the managed master nodes that you can’t see.

– If you use kops or kubeadm which use self-hosted master nodes, you’ll see it.

– If you use RKE2, you won’t see it because of some defense-in-depth security implementation details they use to run certain control plane components as processes isolated from Kubernetes.

- Legacy AWS Cloud Provider can refer to two different things, but in the context of AWS LBs they’re effectively the same thing, as they support the same annotations. You can find the original 22 annotations by searching this page for the string const ServiceAnnotationLoadBalancer. Note that at the time of this writing, if you search for the string service.beta.kubernetes.io/aws-load-balancer on the docs page, you’ll only see 21 annotations. That’s just an issue with the docs, not a code change.

Here’s a list of the 22 annotations you have access to by default:

- service.beta.kubernetes.io/aws-load-balancer-type
- service.beta.kubernetes.io/aws-load-balancer-internal
- service.beta.kubernetes.io/aws-load-balancer-proxy-protocol
- service.beta.kubernetes.io/aws-load-balancer-access-log-emit-interval
- service.beta.kubernetes.io/aws-load-balancer-access-log-enabled
- service.beta.kubernetes.io/aws-load-balancer-access-log-s3-bucket-name
- service.beta.kubernetes.io/aws-load-balancer-access-log-s3-bucket-prefix
- service.beta.kubernetes.io/aws-load-balancer-connection-draining-enabled
- service.beta.kubernetes.io/aws-load-balancer-connection-draining-timeout
- service.beta.kubernetes.io/aws-load-balancer-connection-idle-timeout
- service.beta.kubernetes.io/aws-load-balancer-cross-zone-load-balancing-enabled
- service.beta.kubernetes.io/aws-load-balancer-extra-security-groups
- service.beta.kubernetes.io/aws-load-balancer-security-groups
- service.beta.kubernetes.io/aws-load-balancer-ssl-cert
- service.beta.kubernetes.io/aws-load-balancer-ssl-ports
- service.beta.kubernetes.io/aws-load-balancer-ssl-negotiation-policy
- service.beta.kubernetes.io/aws-load-balancer-backend-protocol
- service.beta.kubernetes.io/aws-load-balancer-additional-resource-tags
- service.beta.kubernetes.io/aws-load-balancer-healthcheck-healthy-threshold
- service.beta.kubernetes.io/aws-load-balancer-healthcheck-unhealthy-threshold
- service.beta.kubernetes.io/aws-load-balancer-healthcheck-timeout
- service.beta.kubernetes.io/aws-load-balancer-healthcheck-interval
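When an annotation seems to have no effect, a quick first check is whether it is on the list above at all. The Python sketch below (the helper name `split_lb_annotations` is hypothetical) assumes you already have a Service's annotations as a dict, for example from `kubectl get service NAME -o json`, and splits its aws-load-balancer-* keys into those on the original 22-annotation list and everything else. It only encodes the list above; newer versions of the legacy provider may recognize more annotations, so treat the second bucket as "worth checking," not "definitely ignored."

```python
# The 22 legacy annotations listed above, stored without their shared prefix.
PREFIX = "service.beta.kubernetes.io/aws-load-balancer-"
LEGACY_SUFFIXES = frozenset({
    "type", "internal", "proxy-protocol",
    "access-log-emit-interval", "access-log-enabled",
    "access-log-s3-bucket-name", "access-log-s3-bucket-prefix",
    "connection-draining-enabled", "connection-draining-timeout",
    "connection-idle-timeout", "cross-zone-load-balancing-enabled",
    "extra-security-groups", "security-groups",
    "ssl-cert", "ssl-ports", "ssl-negotiation-policy",
    "backend-protocol", "additional-resource-tags",
    "healthcheck-healthy-threshold", "healthcheck-unhealthy-threshold",
    "healthcheck-timeout", "healthcheck-interval",
})


def split_lb_annotations(annotations: dict[str, str]) -> tuple[list[str], list[str]]:
    """Return (on_legacy_list, not_on_legacy_list) for aws-load-balancer-* keys.

    Annotations without the aws-load-balancer- prefix are skipped entirely.
    """
    legacy, other = [], []
    for key in sorted(annotations):
        if not key.startswith(PREFIX):
            continue
        bucket = legacy if key[len(PREFIX):] in LEGACY_SUFFIXES else other
        bucket.append(key)
    return legacy, other
```

For example, a Service carrying both `aws-load-balancer-type` and `aws-load-balancer-nlb-target-type` would put the first key in the legacy bucket and the second in the other bucket.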

5.) The AWS Load Balancer Controller Project is conceptually similar to the AWS EBS CSI Driver Project:

Both represent the decoupling of Kubernetes Controller Logic that used to exist in-tree in the universal all-in-one cloud-controller-manager. They both had the common aim of ensuring backward compatibility. Part of me wonders if this played a part in the project’s decision to reuse some annotations.

6.) The EBS CSI Driver Add-on and the AWS LB Controller Add-on projects were started by different groups.

The EBS CSI Driver Add-on Project was started by AWS. The AWS LB Controller Add-on Project was originally started by Ticketmaster and CoreOS and was formerly known as “AWS ALB Ingress Controller”. It was donated to Kubernetes SIG-AWS in 2018. The ALB Ingress Controller was originally best described as an Application Load Balancer Controller. After a while, the project’s scope expanded to cover controlling NLBs as well, so with its v2.0.0 release in October 2020, the project was rebranded to “AWS Load Balancer Controller.”

Why are things the way they are?

If the logic of the all-in-one universal cloud-controller-manager hadn’t been migrated out-of-tree into decoupled external add-ons, tech debt would have permanently slowed down all future feature development and bug fixes. I remember a time when bugs related to externalTrafficPolicy: Local kept recurring and took years to get properly fixed. Now that the logic is decoupled from the code, release process, and testing of the official Kubernetes project, bugs get fixed in months rather than years, and feature requests can start to be considered again.

Faster development has led to great improvements, like making the concept of a Software Defined Perimeter via an Authn/z Proxy easier to implement thanks to the new integration of AWS Cognito with ALB provisioning logic. The AWS LB Controller project also has more options when it comes to provisioning NLBs via annotations. One new feature I particularly like is the service.beta.kubernetes.io/aws-load-balancer-nlb-target-type annotation, which lets you pick how traffic is routed to backend pods. The default value "instance" sends traffic nELB -> NodePort -> Kube service -> Pod. The newly added value "ip" lets traffic go nELB -> Pod, similar to how aELB works.
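To make the two `nlb-target-type` values concrete, here is a minimal Python sketch that builds a Service manifest as a dict and prints it as JSON, which `kubectl apply -f -` accepts on stdin. It assumes aws-load-balancer-controller is installed, since the `aws-load-balancer-type: external` annotation is what hands the Service to it. The function name `nlb_service`, the name/namespace/label values, and the ports are placeholders, not anything from the project's docs.

```python
import json

ANN = "service.beta.kubernetes.io/aws-load-balancer-"


def nlb_service(name: str, namespace: str, app: str, port: int,
                target_port: int, target_type: str = "ip") -> dict:
    """Service of type LoadBalancer handed to aws-load-balancer-controller.

    target_type "instance" routes nELB -> NodePort -> Service -> Pod;
    "ip" registers pod IPs directly, so traffic goes nELB -> Pod.
    """
    if target_type not in ("instance", "ip"):
        raise ValueError(f"unsupported target type: {target_type!r}")
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {
            "name": name,
            "namespace": namespace,
            "annotations": {
                ANN + "type": "external",  # hand the Service to the add-on
                ANN + "nlb-target-type": target_type,
            },
        },
        "spec": {
            "type": "LoadBalancer",
            "selector": {"app": app},
            # With "ip", targetPort is the container port the NLB sends traffic to.
            "ports": [{"protocol": "TCP", "port": port, "targetPort": target_port}],
        },
    }


if __name__ == "__main__":
    # Example: python3 nlb_service.py | kubectl apply -f -
    print(json.dumps(nlb_service("demo", "default", "demo", 80, 8080), indent=2))
```

Building the manifest in code is just a convenience for the sketch; the same two annotations work identically in a hand-written YAML manifest.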

Shorthand Reference:

nELB = network Elastic Load Balancer (L4 LB as a service)

aELB/ALB = application Elastic Load Balancer (L7 LB as a service)

cELB = classic Elastic Load Balancer (L4/L7 LB as a service)

I suspect part of the reason for the scope creep of bringing nELB management into the project formerly known as ALB Ingress Controller was that the ALB Ingress Controller project was probably more accepting of changes and new features during a time when the aws-cloud-controller-manager had a feature freeze to assist with the in-tree migration.

As for why AWS Load Balancer Controller isn’t more like the AWS EBS CSI Driver Add-on, I think it has to do with the fact that AWS had control over the EBS CSI project from start to finish, whereas the LB Controller project was adopted, and big organizations tend to implement changes slowly due to Brooks’s law. (Communication overhead in large organizations significantly slows down changes.)

AWS LB Controller troubleshooting tips

These troubleshooting tips are not meant to be extensive or exhaustive. They’re meant to be just useful enough to get you unstuck and point you in the right direction toward useful topics for follow-up research.

1.) Determine if AWS LB Controller is installed

```shell
kubectl get deployments --namespace=kube-system
```

This matters because it can cause significant changes in behavior. If you have two clusters with 99% of the same YAML workloads and configuration deployed, and the 1% difference between them is whether AWS LB Controller is installed, the 99% identical YAML can produce different results.
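If you’d rather script the check than eyeball the list, here is a minimal Python sketch. It assumes kubectl is on your PATH, that your kubeconfig points at the cluster, and that the controller’s Deployment uses the Helm chart’s default name, aws-load-balancer-controller; a differently named release would need a different match. Both helper names are hypothetical, and the matching logic works on already-parsed JSON so it can be exercised without a cluster.

```python
import json
import subprocess

CONTROLLER_NAME = "aws-load-balancer-controller"  # assumed Helm release/Deployment name


def find_lb_controller_images(deployments: dict) -> list[str]:
    """Container images of the aws-load-balancer-controller Deployment, if present,
    given parsed `kubectl get deployments -n kube-system -o json` output."""
    images = []
    for item in deployments.get("items", []):
        if item["metadata"]["name"] != CONTROLLER_NAME:
            continue
        for container in item["spec"]["template"]["spec"]["containers"]:
            images.append(container["image"])
    return images


def kube_system_deployments() -> dict:
    """Run kubectl and parse its JSON output (requires cluster access)."""
    result = subprocess.run(
        ["kubectl", "get", "deployments", "--namespace=kube-system", "-o", "json"],
        check=True, capture_output=True, text=True,
    )
    return json.loads(result.stdout)
```

Against a live cluster, `find_lb_controller_images(kube_system_deployments())` returns an empty list when the add-on isn’t installed under that name.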

2.) Strongly consider updating to the newest version

You can use the following command to see which version you’re running:

```shell
kubectl get deploy aws-load-balancer-controller -n=kube-system -o yaml | grep image:
```

Example output:

```
image: public.ecr.aws/eks/aws-load-balancer-controller:v2.4.6
```

Here’s a recent real-world scenario where the practice of staying up to date was useful:

EKS 1.21 reached end of support on Feb 15th, 2023. Some users of AWS LB Controller experienced outages until they updated from 2.3.x to 2.4.x. The outages happened because the Kubernetes 1.21 to 1.22 upgrade removed many deprecated APIs. AWS LB Controller 2.4.x supports the newer networking.k8s.io/v1 Ingress API, while 2.3.x only supports the older networking.k8s.io/v1beta1 API, which was removed in Kubernetes 1.22. Issues filed against the aws-load-balancer-controller project flagged this ahead of time, and the 2.4.x versions were made to work with Kubernetes 1.19+, which gave orgs time to migrate. Orgs that follow the best practice of keeping their add-ons up to date avoided outages. Orgs that do minimal maintenance likely ran into this issue when they eventually updated Kubernetes to stay on a supported release.
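The version check and the 2.3.x to 2.4.x lesson above can be combined into a small Python sketch. It parses a semantic-version tag from an image reference like the one in the example output and flags anything older than 2.4.0, which, per the scenario above, lacks support for the networking.k8s.io/v1 Ingress API that Kubernetes 1.22+ requires. The helper names are hypothetical, and images pinned by digest (no version tag) simply return None.

```python
import re
from typing import Optional

# A trailing semver tag such as ":v2.4.6" (the leading "v" is optional).
_TAG = re.compile(r":v?(\d+)\.(\d+)\.(\d+)$")


def image_version(image: str) -> Optional[tuple[int, ...]]:
    """(major, minor, patch) parsed from an image reference, or None."""
    match = _TAG.search(image)
    return tuple(int(part) for part in match.groups()) if match else None


def supports_v1_ingress(image: str) -> bool:
    """True for v2.4.0+, the line the article says supports networking.k8s.io/v1 Ingress."""
    version = image_version(image)
    return version is not None and version >= (2, 4, 0)
```

Comparing tuples keeps the ordering numeric, so v2.10.0 correctly sorts after v2.4.6, which a plain string comparison would get wrong.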

3.) Do a thorough end-to-end read of all the installation docs; it’s easy to miss some requirements:

In addition to installing the aws-load-balancer-controller in the kube-system namespace and correctly setting up IAM roles, you also have to tag the VPC’s subnets correctly.

```hcl
# Snippet of Terraform VPC Config as Code
module "vpc" {
  # ...

  # Subnets eligible for internet-facing load balancers
  public_subnet_tags = {
    "kubernetes.io/role/elb" = "1"
  }

  # Subnets eligible for internal load balancers
  private_subnet_tags = {
    "kubernetes.io/role/internal-elb" = "1"
  }
}

/*
Additional Background Contextual Info:

If you look at the AWS Knowledge Center article
https://aws.amazon.com/premiumsupport/knowledge-center/eks-load-balancer-controller-subnets/
you'll see a reference to

  "kubernetes.io/cluster/${local.cluster_name}" = "shared"

That tag used to be required by older versions of aws-load-balancer-controller.
*/
```
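The subnet role tags can be sanity-checked before you go hunting through controller logs. This Python sketch is a deliberate simplification: it assumes you’ve already fetched each subnet’s ID and tags (for example via the AWS CLI or console) and only checks the role tag shown in the Terraform snippet. The real controller’s subnet discovery considers more than this, so an empty result is a red flag, while a non-empty one isn’t proof that discovery will succeed. `Subnet` and `subnets_for_scheme` are hypothetical names.

```python
from dataclasses import dataclass, field

# Role tags from the Terraform snippet: which subnets may host which LB scheme.
ROLE_TAGS = {
    "internet-facing": "kubernetes.io/role/elb",
    "internal": "kubernetes.io/role/internal-elb",
}


@dataclass
class Subnet:
    subnet_id: str
    tags: dict[str, str] = field(default_factory=dict)


def subnets_for_scheme(subnets: list[Subnet], scheme: str) -> list[str]:
    """IDs of subnets whose role tag is set to "1" for the given scheme.

    Simplified: ignores everything except the role tag.
    """
    tag = ROLE_TAGS[scheme]
    return [s.subnet_id for s in subnets if s.tags.get(tag) == "1"]
```

An empty list for `"internal"` is a common reason an internal LB never provisions when the private subnets were created without their tag.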

4.) If you need to debug an Ingress object, check which IngressClasses are installed:

```shell
kubectl get ingressclass
```

If you see more than one, run kubectl describe ingressclass and check whether one of the IngressClasses has the ingressclass.kubernetes.io/is-default-class: "true" annotation marking it as the default.

If the alb IngressClass isn’t marked as the default, explicitly set your desired class (spec.ingressClassName) on the Ingress object you’re trying to debug.
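The default-class check can also be scripted. This minimal Python sketch assumes the parsed JSON from `kubectl get ingressclass -o json` and that the add-on’s IngressClass is named alb, as in the tip above; the helper names are hypothetical. It reports whether an Ingress should set spec.ingressClassName explicitly, which is also the safe choice when more than one class claims to be the default, since Kubernetes then refuses to create Ingresses that omit the class.

```python
DEFAULT_CLASS_ANNOTATION = "ingressclass.kubernetes.io/is-default-class"


def default_ingress_classes(ingress_classes: dict) -> list[str]:
    """Names of IngressClasses annotated as the cluster default, given parsed
    `kubectl get ingressclass -o json` output."""
    defaults = []
    for item in ingress_classes.get("items", []):
        annotations = item["metadata"].get("annotations") or {}
        if annotations.get(DEFAULT_CLASS_ANNOTATION) == "true":
            defaults.append(item["metadata"]["name"])
    return defaults


def should_set_class_explicitly(ingress_classes: dict, wanted: str = "alb") -> bool:
    """True unless `wanted` is the one and only default IngressClass."""
    return default_ingress_classes(ingress_classes) != [wanted]
```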

5.) If you need to debug LB provisioning annotations specific to Service objects, things are a bit more complicated…

How it’s complicated:

You effectively have two different controllers running in the same cluster at the same time.

In the case of EKS, since it’s a managed service/has managed Kubernetes Master Nodes, you can only visually see one of the two controllers. (You won’t see aws-cloud-controller-manager as it’s running on the managed master nodes.)

The two controllers have lots of overlap in terms of feature functionality and objects they’re responsible for:

– aws-cloud-controller-manager: provisions cELBs and nELBs

– aws-load-balancer-controller: provisions aELBs and nELBs

5A.) Determine which controller is actively managing your Kubernetes service object.

Per this doc, if you annotate a Kubernetes service with either of these options:

service.beta.kubernetes.io/aws-load-balancer-type: nlb-ip

service.beta.kubernetes.io/aws-load-balancer-type: external

Then aws-cloud-controller-manager will ignore the object so that aws-load-balancer-controller can manage it.
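The hand-off rule above is simple enough to express as a function, which can help when reasoning about a Service that is stuck. This Python sketch is a model under stated assumptions, not either controller’s actual code: it encodes only the annotation rule described in 5A (it ignores other selection mechanisms such as spec.loadBalancerClass) and adds one consequence of that rule: if the annotation hands the Service off but the add-on isn’t installed, nothing provisions it. `managing_controller` is a hypothetical name.

```python
from typing import Mapping, Optional

LB_TYPE = "service.beta.kubernetes.io/aws-load-balancer-type"
# Values that, per the docs cited in 5A, make the legacy controller skip the Service.
HANDED_OFF = frozenset({"external", "nlb-ip"})


def managing_controller(
    service_type: str,
    annotations: Mapping[str, str],
    lb_controller_installed: bool = True,
) -> Optional[str]:
    """Best-guess owner of a Service's load balancer under the 5A rule.

    Returns None when no controller is expected to provision an LB.
    """
    if service_type != "LoadBalancer":
        return None  # non-LoadBalancer Services don't get a cloud LB
    if annotations.get(LB_TYPE) in HANDED_OFF:
        # The legacy controller steps aside; without the add-on, nothing picks
        # the Service up and it stays Pending.
        return "aws-load-balancer-controller" if lb_controller_installed else None
    return "aws-cloud-controller-manager"
```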

5B.) If aws-load-balancer-controller is managing a service, kubectl describe service becomes useful!

Many who have tried advanced LB options have probably run into a scenario where an LB is stuck in Pending status and refuses to provision. Let’s say you run kubectl describe service $NAME to debug it. If the legacy aws-cloud-controller-manager is managing the service, you’ll likely get an unhelpful error message. If aws-load-balancer-controller is managing the service, you’ll actually get error messages that are useful for debugging. (They’re great at pointing out whether you messed up an installation step, like missing IAM permissions or skipping the part about tagging subnets.)

5C.) Skim release notes to review any changes that need to be accounted for:

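- aws-cloud-controller-manager defaults to provisioning LBs with public IPs; you need to add config (service.beta.kubernetes.io/aws-load-balancer-internal: "true") to provision private ones. The reverse is true for aws-load-balancer-controller since v2.2.0: it defaults to private, and you need service.beta.kubernetes.io/aws-load-balancer-scheme: internet-facing for a public LB.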

- aws-cloud-controller-manager defaults to provisioning cELBs. aws-load-balancer-controller defaults to provisioning nELBs (for any Service annotated with service.beta.kubernetes.io/aws-load-balancer-type: external, since that annotation controls which controller is in charge).

- aws-load-balancer-controller’s container images used to be available on Docker Hub, but starting with v2.4.6 they’re only hosted on the public.ecr.aws container registry.
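Because the two controllers have opposite defaults for exposure, it can help to always state the intent explicitly. The Python sketch below (the helper name `exposure_annotations` is hypothetical) returns the annotations for a public or private LB under each controller, following the defaults described in the first bullet above. Double-check the annotation names against the docs for the controller versions you actually run.

```python
LEGACY = "aws-cloud-controller-manager"
ADD_ON = "aws-load-balancer-controller"
ANN = "service.beta.kubernetes.io/aws-load-balancer-"


def exposure_annotations(controller: str, public: bool) -> dict[str, str]:
    """Annotations for a public or private LB under each controller's defaults."""
    if controller == LEGACY:
        # Legacy default is internet-facing, so only private LBs need an annotation.
        return {} if public else {ANN + "internal": "true"}
    if controller == ADD_ON:
        # Add-on default is internal (since v2.2.0); state the scheme explicitly either way.
        return {
            ANN + "type": "external",  # hands the Service to the add-on (see 5A)
            ANN + "scheme": "internet-facing" if public else "internal",
        }
    raise ValueError(f"unknown controller: {controller!r}")
```

Spelling out the scheme even when it matches the default means a manifest keeps the same exposure if it’s moved between clusters with and without the add-on.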

5D.) Most Kubernetes objects support reconciliation loops that transition the current state to the desired state. Load Balancer controllers tend to have a few edge cases where you need to delete and recreate for iterative changes to take effect.

This isn’t usually needed, but sometimes it’s worth a try; the annotation in 5A is an example where the docs recommend recreation over modification. It’s also worth pointing out that the values nlb-ip and external are specific to the AWS LB Controller, while the Legacy Controller uses the value nlb (and leaving the annotation out provisions a cELB). This gotcha could be important to anyone using a GitOps controller like ArgoCD or Flux to make iterative changes, as those tend to update manifests in place rather than delete and recreate resources. So an ArgoCD or Flux user testing iterative changes in a dev environment may need to intervene manually in this edge case to see their changes take effect.
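For GitOps-style workflows, one way to catch this edge case is to compare the live and desired values of the aws-load-balancer-type annotation before syncing. This Python sketch (hypothetical helper names) assumes you already have both annotation maps, for example from `kubectl get service NAME -o json` and your manifest, and returns the kubectl commands to run: a delete followed by an apply when the type changes, otherwise just an apply. Deleting a Service also deletes its load balancer, so the replacement LB gets a new DNS name; only do this where that’s acceptable, such as the dev environment described above.

```python
LB_TYPE = "service.beta.kubernetes.io/aws-load-balancer-type"


def needs_recreate(live: dict[str, str], desired: dict[str, str]) -> bool:
    """True if the aws-load-balancer-type annotation changes (including being
    added or removed), the case the docs recommend handling by recreation."""
    return live.get(LB_TYPE) != desired.get(LB_TYPE)


def rollout_commands(name: str, namespace: str, manifest: str,
                     live: dict[str, str], desired: dict[str, str]) -> list[list[str]]:
    """kubectl commands to roll out a Service change, deleting first when needed."""
    apply = ["kubectl", "apply", "-f", manifest]
    if needs_recreate(live, desired):
        return [["kubectl", "delete", "service", name, "--namespace", namespace], apply]
    return [apply]
```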

Conclusion

I had two main reasons for writing this. The first was to share knowledge and that part is done. The second was to help encourage changes that could help make this less confusing. There are three changes that project and documentation maintainers could make that would go a long way in helping to clear up confusion. They all involve making aws-load-balancer-controller more like the EBS-CSI project:

- Add the project to EKS’s list of official add-ons that are installable via the AWS Console GUI.

- Update docs in multiple places to make it immediately obvious that the aws-load-balancer-controller is an add-on.

- Update the annotations from referencing kubernetes.io to something more like ebs.csi.aws.com. This would make the add-on status obvious by inspection and have an SEO (search engine optimization) effect that would improve the experience of looking up relevant docs.
