Google Cloud FinOps Best Practices: A Practical Framework

Cloud cost optimization has gone well beyond simple rightsizing recommendations. As organizations grow their Google Cloud deployments, the focus shifts from spotting obvious inefficiencies to understanding how workload goals, infrastructure design, and costs all connect. Traditional FinOps approaches often fall into what we call the “illusion of efficiency,” where surface-level metrics like CPU utilization suggest optimal performance while masking deeper architectural waste.

This illusion happens because traditional monitoring focuses on infrastructure metrics rather than workload outcomes. For example, a BigQuery job showing 90% slot utilization appears efficient until you discover it’s scanning entire tables due to missing partitions, burning through compute unnecessarily. Similarly, a Kubernetes cluster with high CPU usage might seem well-optimized while pods actually queue due to memory constraints or poor resource allocation.

These (and other) scenarios create a false sense of efficiency where busy infrastructure masks fundamental design problems that drive real costs.

Unlike standard FinOps that optimizes based on generic utilization metrics, intent-aware optimization considers the purpose behind each workload. For instance, a machine learning training job may appear “underutilized” during data preprocessing but is actually performing optimally for its pipeline stage.

Whether you’re managing latency-sensitive applications that need extra capacity for SLA compliance, ensuring failover guarantees with redundant infrastructure, or optimizing for developer velocity over cost savings, your cloud operations must adapt to each scenario. The following strategies can help you build a sustainable approach to Google Cloud cost management that grows along with your organization.

What is Google Cloud FinOps?

Google Cloud FinOps is an operational framework that brings financial accountability to cloud spending through cross-functional collaboration between engineering, finance, and business teams. Rather than treating cost optimization as a reactive afterthought, FinOps focuses on ongoing, proactive practices to understand, track, and optimize cloud spending in real time.

Per the FinOps Foundation, the discipline centers on three core phases:

- Inform (visibility into spending patterns)

- Optimize (actionable cost reduction)

- Operate (ongoing governance and accountability)

Within Google Cloud’s ecosystem, this translates to using native tools like Cloud Billing, Cloud Asset Inventory, and Recommender API while integrating with specialized platforms like DoiT that provide deeper workload-specific insights.

What is the FinOps Score on Google Cloud?

Google Cloud’s FinOps score helps you gauge how well your organization is managing cost optimization across different areas. The score is calculated based on your adoption of Google’s recommended practices: commitment coverage percentages, idle resource cleanup rates, and budget alert configuration completeness. You’ll find it in the FinOps Hub dashboard, where it’s updated monthly and serves as a starting point for quarterly optimization reviews.

However, the native FinOps score focuses primarily on Google’s recommended best practices without considering the nuanced requirements of your specific workloads. For instance, a machine learning training job that appears “underutilized” during data preprocessing phases may actually be operating optimally for its intended purpose. This is where intent-aware analysis becomes important for accurate financial assessment.

Why FinOps matters for Google Cloud

Google Cloud’s pricing model rewards strategic planning through committed use discounts, sustained use discounts, and Spot VMs (the successor to preemptible VMs). However, getting maximum value from these benefits, commitments in particular, requires careful forecasting and workload analysis. Organizations that implement structured FinOps practices typically see cost reductions within the first year, not just through resource downsizing but through architectural improvements that eliminate design-level waste.

Google Cloud’s services introduce unique optimization challenges. For example, BigQuery’s pricing, whether on-demand (billed by bytes scanned) or capacity-based (billed by slots), means query performance and cost depend heavily on data structure and partition strategy, making it difficult to predict costs without deep SQL expertise. Similarly, Cloud Run’s per-request billing creates optimization complexity where cold starts can dramatically impact both performance and costs, requiring careful concurrency and minimum instance tuning.

These pricing models shift optimization from simple resource sizing to architectural decision-making. Without proper FinOps processes, engineering teams often default to oversized resources to avoid performance risks, while finance teams lack the technical context to identify legitimate optimization opportunities within these service-specific constraints.

Essential principles of Google Cloud FinOps

Successful Google Cloud FinOps is built on several principles that set mature practices apart from quick, ad-hoc cost-cutting measures. Real-time cost insights form the backbone of any successful program, though in practice, “real time” often means hourly or daily cost attribution, since Google Cloud billing data has inherent delays. These insights require automated data collection that correlates spending with business metrics rather than just infrastructure utilization.

Stakeholder accountability makes cost optimization a shared responsibility, not just billing data for the finance team to worry about. This involves setting up clear ownership models, so engineering teams see how their architectural decisions affect costs, and business units can directly link cloud spending to revenue.

Cross-functional collaboration helps break down barriers between teams that usually work in isolation. When storage engineers understand the downstream impact of data retention policies on analytics costs, or when application developers recognize how their code patterns affect Cloud Functions pricing, optimization becomes a natural outcome of informed decision-making. Having a dedicated FinOps team can ultimately help streamline your cloud financial management strategy.

What FinOps metrics should you be tracking?

Tracking the right FinOps metrics is necessary for gaining visibility into cloud spending and driving cost optimization efforts. Here are some of the most important ones.

Cost allocation accuracy

Precise cost allocation forms the foundation of effective FinOps governance. Google Cloud’s labeling and project structure need to align with your organizational hierarchy to enable accurate chargeback and showback reporting. Implement mandatory labeling policies that cover environment, team, application, and cost center details for all resources.

Set up automated reports in BigQuery to track spend by label, such as department, project, or environment, and review them monthly for accuracy and completeness. Use Cloud Asset Inventory queries to validate label coverage at scale: create weekly reports showing the percentage of unlabeled resources by service type, and set a coverage target (aim for 95% or more of resources labeled). If you find discrepancies, follow up with the relevant department to correct labels or investigate unexpected spend.
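As a rough illustration of the weekly coverage report described above, the following Python sketch computes label coverage per service and flags services that fall short of the 95% target. The resource records, field names, and function names are hypothetical. In practice the rows might come from a Cloud Asset Inventory export, whose actual schema differs.

```python
from collections import defaultdict

# Hypothetical inventory rows; real exports use a different schema.
SAMPLE_RESOURCES = [
    {"service": "compute", "labels": {"team": "web", "environment": "prod"}},
    {"service": "compute", "labels": {}},
    {"service": "storage", "labels": {"team": "data"}},
    {"service": "storage", "labels": {"team": "data", "environment": "dev"}},
]

COVERAGE_TARGET = 0.95  # the 95% coverage target discussed above


def label_coverage_by_service(resources):
    """Return {service: fraction of resources carrying at least one label}."""
    totals = defaultdict(int)
    labeled = defaultdict(int)
    for resource in resources:
        totals[resource["service"]] += 1
        if resource["labels"]:
            labeled[resource["service"]] += 1
    return {service: labeled[service] / totals[service] for service in totals}


def services_below_target(resources, target=COVERAGE_TARGET):
    """List services whose label coverage falls short of the target."""
    coverage = label_coverage_by_service(resources)
    return sorted(s for s, fraction in coverage.items() if fraction < target)
```

With the sample data, `services_below_target(SAMPLE_RESOURCES)` flags only `compute`, where one of the two resources has no labels.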

Accurate cost allocation is fundamental for financial accountability and transparency in cloud spending. Without it, cloud expenses become a single, opaque line item, making it impossible to determine which teams, projects, or business units are driving costs. This lack of visibility hinders efforts to optimize spending and hold stakeholders accountable.

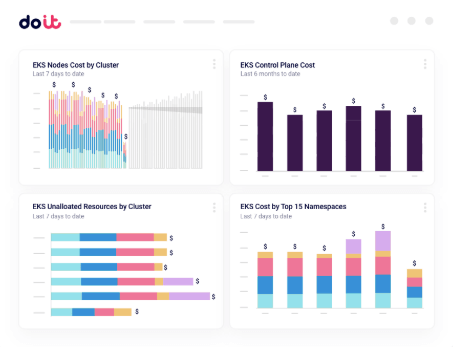

Utilization rates and efficiency metrics

Traditional utilization metrics like CPU, memory, and storage consumption provide only surface-level insights into efficiency, measuring how much capacity is used, but not whether that usage delivers intended business outcomes. Intent-aware optimization goes beyond efficiency to examine whether your workloads achieve their performance objectives at optimal cost, considering factors like latency requirements, failover capabilities, and batch processing windows.

For example, a Kubernetes cluster showing 40% average CPU utilization might look inefficient until you learn that it’s designed to absorb traffic spikes while keeping response times under 200 ms. The “unused” capacity is engineered headroom needed to meet SLA commitments, which makes it a design feature rather than waste.
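One way to sanity-check whether low average utilization reflects engineered headroom is simple arithmetic: if traffic peaks at some multiple of its average and utilization at peak is capped to protect latency, the expected average utilization is the cap divided by that multiple. The Python sketch below assumes a 2x peak-to-average ratio and an 80% cap at peak. Both figures, the tolerance, and the function names are illustrative assumptions, not values from any SLA.

```python
def expected_average_utilization(peak_to_average_ratio, max_peak_utilization):
    """Average utilization implied by a peak cap and a traffic peak ratio."""
    if peak_to_average_ratio < 1:
        raise ValueError("peak_to_average_ratio must be at least 1")
    return max_peak_utilization / peak_to_average_ratio


def is_engineered_headroom(observed_average, peak_to_average_ratio,
                           max_peak_utilization, tolerance=0.05):
    """True if observed utilization is consistent with the designed headroom.

    Utilization well below the expected average suggests waste beyond
    what the spike-handling design accounts for.
    """
    expected = expected_average_utilization(
        peak_to_average_ratio, max_peak_utilization
    )
    return observed_average >= expected - tolerance


# Assumed design: traffic peaks at 2x average, peak utilization capped at 80%.
expected = expected_average_utilization(2.0, 0.80)
```

Under these assumptions the expected average works out to 40%, so a cluster observed at 40% is behaving as designed, while one averaging 15% would merit a closer look.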

Commitment coverage and discount optimization

Google Cloud’s committed use discounts (CUDs) require careful forecasting and workload analysis to maximize value, while sustained use discounts apply automatically to eligible usage. Track your coverage ratios across different resource types and regions, identifying opportunities to increase commitment levels without compromising flexibility. In dynamic environments, start with conservative commitments at 70%–80% of baseline usage to avoid paying for unused commitments when workloads fluctuate unexpectedly.
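The conservative sizing rule above can be sketched in a few lines of Python. How to define "baseline" is a judgment call; this example assumes it is the lowest hourly usage observed in a sample window, and the vCPU figures and function name are hypothetical.

```python
def conservative_commitment(hourly_usage, fraction=0.75):
    """Size a commitment as a fraction of baseline usage.

    Baseline is assumed here to be the lowest observed hourly usage,
    i.e. the capacity that was always in use during the sample window.
    """
    if not hourly_usage:
        raise ValueError("hourly_usage must not be empty")
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    baseline = min(hourly_usage)
    return baseline * fraction


# Hypothetical vCPU counts in use for each sampled hour.
sample_vcpus = [120, 96, 80, 104, 150, 88]
commit_vcpus = conservative_commitment(sample_vcpus, fraction=0.75)
```

Here the baseline is 80 vCPUs, so a 75% commitment covers 60 vCPUs and leaves the rest on flexible pricing.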

Use Active Assist or the Recommender API to spot VMs that are consistently underused, then set up a review with engineering to either rightsize or decommission them. Monitor commitment utilization weekly. If usage drops below 85% of committed capacity for multiple weeks, consider reducing future commitments or shifting workloads to maximize existing ones. Regular check-ins on resource usage help ensure you’re paying only for what you actually need, boosting your cloud ROI. Aim to schedule these reviews at least once a quarter.
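The weekly utilization check can be expressed as a small streak detector. This is a minimal Python sketch under stated assumptions: "multiple weeks" is interpreted as three consecutive weeks, and the utilization series is hypothetical input you would derive from your own billing data.

```python
def weeks_below_threshold(weekly_utilization, threshold=0.85):
    """Length of the most recent run of weeks below the threshold."""
    streak = 0
    for value in reversed(weekly_utilization):
        if value >= threshold:
            break
        streak += 1
    return streak


def should_review_commitment(weekly_utilization, threshold=0.85, min_weeks=3):
    """True when utilization has stayed below threshold for min_weeks.

    min_weeks=3 is an assumed reading of "multiple weeks".
    """
    return weeks_below_threshold(weekly_utilization, threshold) >= min_weeks


# Hypothetical utilization (used / committed), oldest week first.
recent_weeks = [0.93, 0.90, 0.84, 0.80, 0.78]
needs_review = should_review_commitment(recent_weeks)
```

Counting only the most recent streak keeps a single dip from weeks ago from triggering a review after utilization has recovered.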

Cost per business unit and application

Establish clear cost attribution models that connect cloud spending to business outcomes. This means tracking cost per customer, per transaction, or per revenue dollar, depending on your business model. Doing so enables better-informed decisions about feature investments and helps justify cloud spending to executive stakeholders.
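A unit-cost calculation is straightforward once spend and business volume sit side by side. The Python sketch below uses `Decimal` to avoid floating-point rounding on currency. The monthly figures are invented for illustration.

```python
from decimal import Decimal


def unit_cost(total_cost, units):
    """Return cost per unit (customer, transaction, or revenue dollar)."""
    if units <= 0:
        raise ValueError("units must be positive")
    return Decimal(str(total_cost)) / Decimal(str(units))


# Hypothetical monthly figures for one application.
monthly_cloud_cost = Decimal("12500.00")
transactions = 2_500_000
customers = 500

cost_per_transaction = unit_cost(monthly_cloud_cost, transactions)
cost_per_customer = unit_cost(monthly_cloud_cost, customers)
```

Tracking these ratios over time shows whether spend is growing faster or slower than the business activity it supports.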

Set up automated reporting that links application performance with infrastructure costs, giving you a clear view of your cloud operation’s efficiency. Applications that deliver high business value while maintaining cost discipline become your go-to models for organization-wide best practices.

Anomaly detection and budget variance

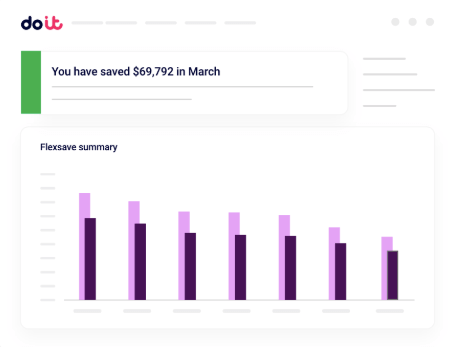

Automated anomaly detection identifies unexpected cost increases before they impact monthly budgets. Google Cloud’s budget alerts provide basic monitoring. However, sophisticated FinOps requires predictive analytics that consider usage patterns, seasonal variations, and planned infrastructure changes—capabilities offered through platforms such as DoiT.

Establish escalation procedures that balance rapid response with operational stability. Escalate immediately for unplanned spikes of more than 20% above the daily average or for any increase without corresponding business activity. Planned increases from scheduled batch jobs, load testing, or known deployments don’t need escalation, but verify them against your change calendar. Cost spikes during business hours with normal application performance typically indicate scaling events and require monitoring, not immediate action.
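The first two escalation rules can be captured in a short triage function. This Python sketch assumes the 20% threshold is measured against the mean of recent daily costs and that a `planned_change` flag comes from your change calendar. The function name and return labels are hypothetical, and the business-hours rule is left out for brevity.

```python
from statistics import mean


def classify_spike(daily_costs, today_cost, planned_change=False,
                   spike_ratio=0.20):
    """Triage today's cost against the recent daily average.

    Returns "normal", "verify-change-calendar", or "escalate".
    """
    if not daily_costs:
        raise ValueError("daily_costs must not be empty")
    baseline = mean(daily_costs)
    if today_cost <= baseline * (1 + spike_ratio):
        return "normal"
    if planned_change:
        # Planned work explains the spike, but confirm it is on the calendar.
        return "verify-change-calendar"
    return "escalate"


# Hypothetical daily spend in dollars for the previous four days.
history = [100, 110, 90, 100]
```

With this history the average is 100, so a day at 115 is treated as normal, while a day at 130 is escalated unless a planned change accounts for it.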

Set up budget alerts and anomaly detection in Google Cloud Billing to stay on top of your cloud spend. When an alert is triggered, make sure your finance and engineering teams review it right away. Anomaly detection gives you real-time insights into unexpected spikes or drops in spending, so you can catch errors, misconfigurations, or unplanned usage before things get out of hand. Acting quickly on these alerts helps prevent budget issues and highlights potential operational problems, so assign a finance analyst and an engineering lead to work together on monitoring and responding to alerts.

5 keys to Google Cloud FinOps success

Succeeding in Google Cloud FinOps takes a smart strategy that brings together financial management and technical operations. Here are five keys to success:

1. Tag resources effectively

Comprehensive resource tagging enables granular cost analysis and automated optimization. Use hierarchical labeling strategies to reflect your organization’s structure, application taxonomy, and operational metadata. Good label categories to include are environment type, team ownership, application ID, and cost center allocation.

Use Google Cloud Organization Policy Service to automatically block the creation of untagged resources, though enforcement coverage varies. For instance, Compute Engine and GKE support mandatory labeling, while some services—like Cloud Functions and BigQuery datasets—have limited enforcement capabilities. For services without policy enforcement, use Cloud Asset Inventory to set alerts for newly created untagged resources. Schedule monthly audits to spot and fix any that slip through.
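For services without policy enforcement, an audit job can check each new resource against the required label set. The Python sketch below shows that check in isolation. The label keys mirror the categories listed earlier, but the exact key names and the resource records are assumptions for illustration.

```python
# Assumed key names for the four label categories described above.
REQUIRED_LABELS = ("environment", "team", "application", "cost-center")


def missing_labels(labels, required=REQUIRED_LABELS):
    """Return the required label keys that are absent or empty."""
    return [key for key in required if not labels.get(key)]


def audit(resources, required=REQUIRED_LABELS):
    """Map resource name to missing keys, for non-compliant resources only."""
    report = {}
    for resource in resources:
        gaps = missing_labels(resource.get("labels", {}), required)
        if gaps:
            report[resource["name"]] = gaps
    return report


# Hypothetical newly created resources.
new_resources = [
    {"name": "fn-resize", "labels": {"team": "media", "environment": "prod"}},
    {"name": "ds-sales", "labels": {
        "team": "bi", "environment": "prod",
        "application": "reporting", "cost-center": "cc-104",
    }},
]
audit_report = audit(new_resources)
```

The report lists only resources with gaps, which keeps the monthly audit focused on what needs fixing.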

2. Adopt commitments and discounts

Effectively optimizing Google Cloud pricing means taking a close look at workload patterns and growth projections. While committed use discounts provide significant cost savings for predictable workloads, they require accurate forecasting, because you pay for the committed amount whether or not you use it.

Analyze historical usage with BigQuery billing export across multiple dimensions (resource types, regions, time periods) to identify workloads suited to committed use discounts (CUDs). Watch for overcommitment risk indicators such as usage dropping below 80% of committed capacity for two consecutive months, upcoming project sunsets affecting baseline workloads, or significant architecture changes that could reduce resource requirements. Start with a one-year CUD for baseline workloads, then extend to longer terms as your forecasting accuracy improves. Reassess commitments quarterly to adapt to changing demands.

Taking the first step with cloud savings requires balancing immediate cost reductions with operational flexibility.

3. Engage in regular cross-team reviews

Monthly cost reviews should involve engineering, finance, and business stakeholders to ensure optimization efforts align with business objectives. These reviews should focus on analyzing trends, making the most of commitments, and spotting opportunities for optimization that need teamwork across different functions.

Create standardized dashboards in a tool such as Looker or FinOps Hub to review cost trends, optimization actions, and budget variances. These reports should translate technical metrics into clear business terms so that technical and financial teams can have productive conversations. To win over busy engineers, show how cost optimization improves system reliability and performance rather than constraining resources. Otherwise, they may feel pushed into processes without a clear reason.

4. Automate idle resource cleanup

Set up automated lifecycle management to handle development and testing environments that tend to build up unused resources. Use Cloud Scheduler and Cloud Functions to automatically shut down non-production workloads during off-hours and clean up resources that go beyond their retention periods. For instance, set a policy to automatically delete test VMs older than 30 days unless tagged for retention. Review cleanup logs monthly to ensure automation is functioning, and adjust rules as needed.
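The selection logic behind such a cleanup policy might look like the Python sketch below, which picks test VMs older than 30 days unless they carry a retention label. The `environment` and `retain` label keys, the record shape, and the function name are assumptions. A real job would fetch the instance list from the Compute Engine API and perform the deletions in a separate, logged step.

```python
from datetime import datetime, timedelta, timezone

MAX_AGE = timedelta(days=30)  # the 30-day policy described above


def vms_to_delete(vms, now, max_age=MAX_AGE):
    """Return names of test VMs past max_age that are not marked for retention."""
    return [
        vm["name"]
        for vm in vms
        if vm["labels"].get("environment") == "test"
        and vm["labels"].get("retain") != "true"
        and now - vm["created"] > max_age
    ]


# Fixed reference time and hypothetical inventory for a reproducible example.
now = datetime(2025, 1, 31, tzinfo=timezone.utc)
inventory = [
    {"name": "test-old", "labels": {"environment": "test"},
     "created": now - timedelta(days=45)},
    {"name": "test-keep", "labels": {"environment": "test", "retain": "true"},
     "created": now - timedelta(days=90)},
    {"name": "test-new", "labels": {"environment": "test"},
     "created": now - timedelta(days=5)},
    {"name": "prod-old", "labels": {"environment": "prod"},
     "created": now - timedelta(days=400)},
]
candidates = vms_to_delete(inventory, now)
```

Separating selection from deletion makes it easy to run the job in a dry-run mode first and to compare its output against the monthly cleanup logs.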

Advanced automation includes intelligent shutdown policies that consider application dependencies and backup requirements. For example, database instances might require backup completion before shutdown, while development environments can implement more aggressive cleanup schedules.

5. Benchmark against industry peers

Regular benchmarking provides context for your optimization efforts and identifies areas for improvement. Use the FinOps Hub’s peer benchmark score to compare your cost efficiency—including cost per workload, discount utilization rates, and operational efficiency metrics—against peer organizations and industry standards.

If your cost per workload is above the industry median, investigate whether this is due to higher performance requirements or inefficiencies. But try to steer clear of simple comparisons that overlook architectural differences or business needs. A higher cost per user could be justified if your application delivers superior performance or reliability compared to alternatives.

After benchmarking, set internal targets for improvement and assign owners to address gaps. Establishing a cloud cost optimization culture requires understanding these nuances and communicating value beyond cost metrics alone.

Make a success of your FinOps initiative

Building sustainable Google Cloud FinOps requires more than just following a few best practices. It takes a cultural shift where cost consciousness becomes a natural part of both engineering and business decisions. This starts with clear governance frameworks that outline roles, responsibilities, and escalation processes for managing costs effectively.

Invest in specialized cost management tools that provide intent-aware analysis rather than surface-level metrics. The most sophisticated organizations move beyond traditional rightsizing recommendations to understand how architectural decisions impact both cost and performance outcomes.

Ultimately, success comes from knowing that good FinOps doesn’t limit innovation—the goal is to boost it. When teams understand the use cases and relationships between their technical choices and business outcomes, optimization becomes more of a creative challenge that drives both efficiency and performance improvements.

Learn how you can uncover hidden saving opportunities and reduce your Google Cloud spend.