Blog

Introducing Automation for Argo Rollouts via PerfectScale

Argo Rollouts are widely used by modern engineering teams to ensure smooth, non-disruptive delivery, enabling them to move fast

9 Practical Steps to Turn AI Cost Chaos into Clarity

How leading FinOps teams allocate, measure,

Building a production-ready Attack Surface Management Agent with AWS Bedrock while staying within budget with DoiT Cloud Intelligence™

The internet is vast and constantly growing. As of mid-2025, it hosts more than 1.25 billion websites and generates around 149 zettabytes

Introducing CloudFlow Policies: Smarter Cloud Governance Through Automation

Hi everyone. Craig from DoiT back again to show you a brand new capability in CloudFlow called Policies that

Introducing Kubernetes Intelligence: Deeper Visibility into Kubernetes Costs and Utilization

Hey everyone, I’m Craig Lowell from DoiT back again to share some exciting news about a new feature in

Visualize your Google Cloud infrastructure in real-time with Cloud Diagrams

Cloud Diagrams now supports Google Cloud, bringing automated infrastructure visualization to GCP environments. Teams can automatically map dependencies, troubleshoot network issues across projects, track infrastructure changes over time, and maintain always-current architecture documentation.

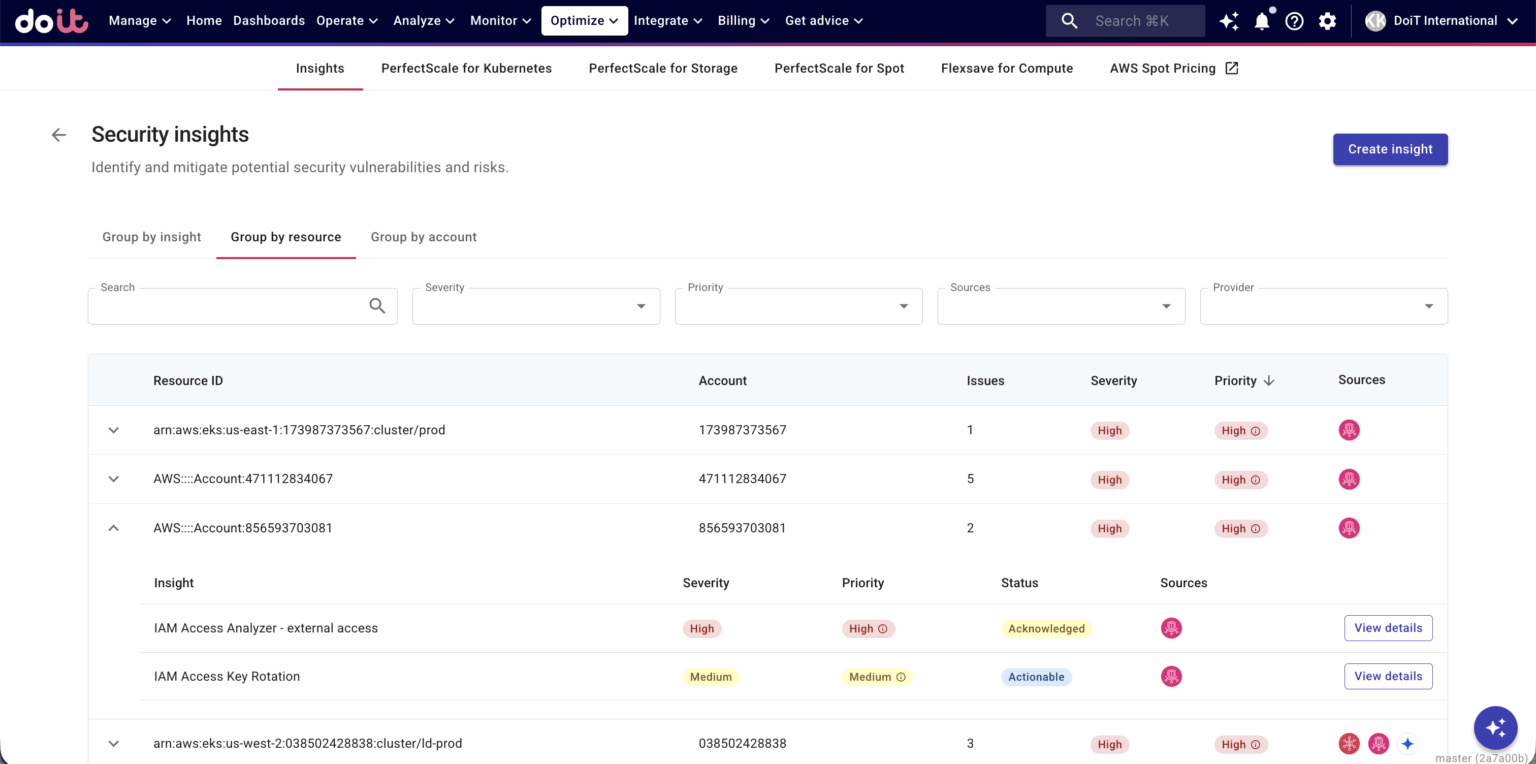

Introducing Security Insights in DoiT Cloud Intelligence™

Security teams are often overwhelmed by the volume of findings and alerts, and by the time it takes to

Kubernetes Intelligence by DoiT: Optimize Costs in AWS & GCP

The flexibility offered by Kubernetes environments carries inherent complexity, particularly when it comes to understanding how those Kubernetes workloads

Manage AWS EKS Extended Support Charges with DoiT CloudFlow

Hey, everyone. Craig Lowell from DoiT back again to walk you through another common cloud optimization task that can

Building AWS Architecture with MCP Servers and Strands Agents

In the rapidly evolving landscape of cloud architecture and AI-driven development, two powerful technologies are revolutionizing how we