Keeping up with BigQuery cost optimization best practices is daunting – but what if you had a tool that simply told you what to do?

Your BigQuery costs are going up — now what?

Sure, you could dig through audit logs for clues, or read through Google Cloud’s BigQuery Best Practices to better understand what might be optimizable.

But faced with a backlog of other projects and fires to put out, can you or your team really afford to devote the time required? From our experience, most of the time the answer is a resounding “No.”

Most companies we work with have just too many higher-priority product-related tasks and limited engineering resources to tackle BigQuery cost optimization. As a result, many build out their data infrastructure in BigQuery on the fly, only to run into the consequences of those decisions later on.

But what if you had a tool that simply told you what to do?

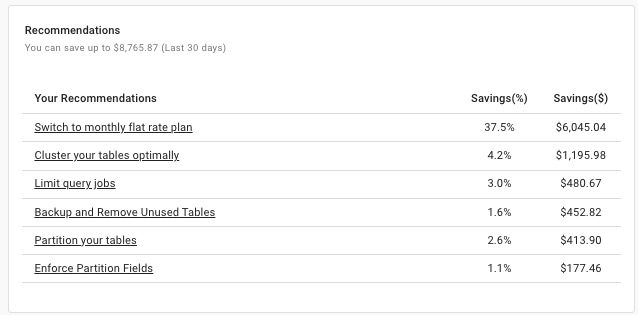

That’s why we built the BigQuery Lens, which delivers personalized recommendations leveraging BigQuery best practices and our own experiences helping thousands of customers optimize their BigQuery usage.

Let’s explore several BigQuery best practices and how you can implement them using BigQuery Lens.

“Thanks to the BigQuery Lens’ recommendations, we invested time in partitioning our key tables and were able to reduce our BigQuery expenses by 25% a month.”

- Daniel Rimon, Head of Data Engineering, Resident

How BigQuery Lens helps you optimize your costs

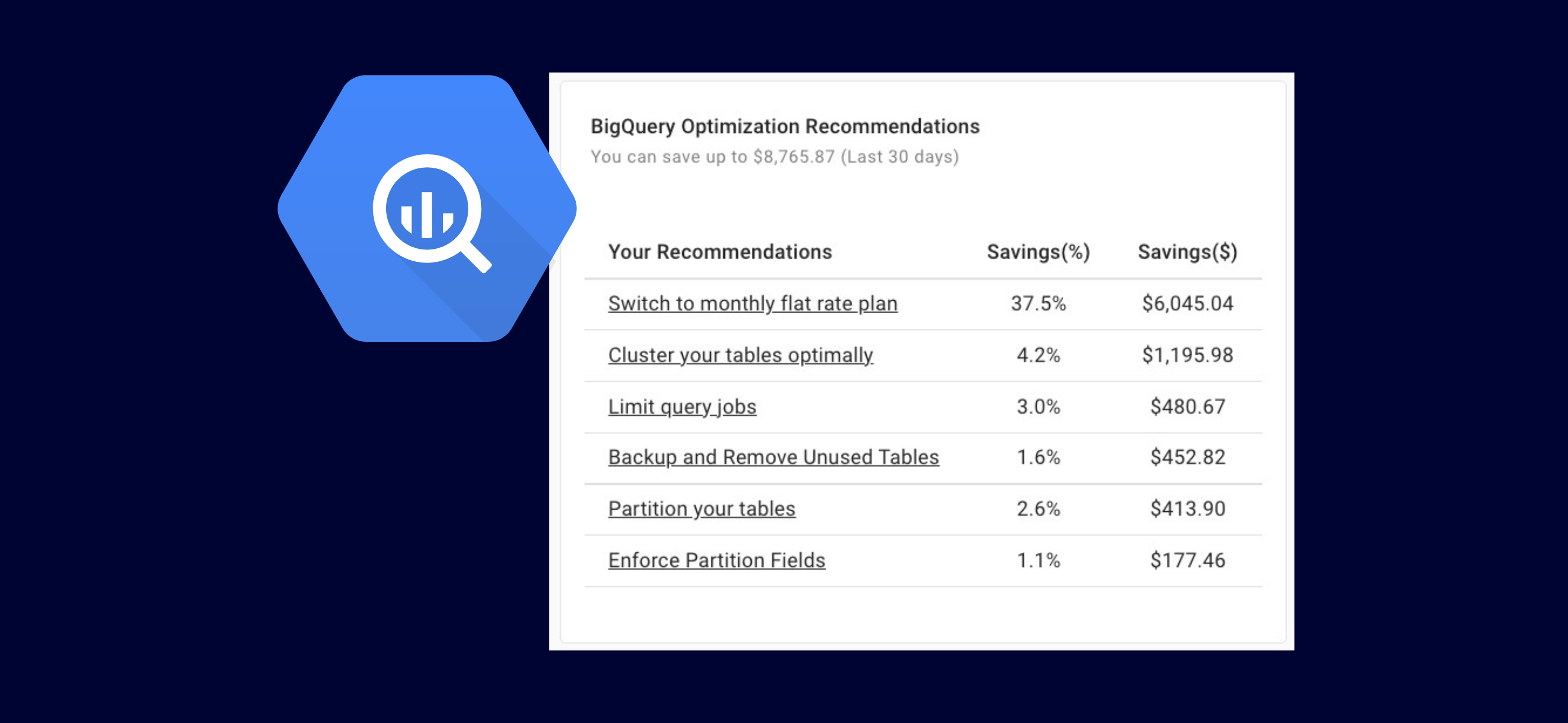

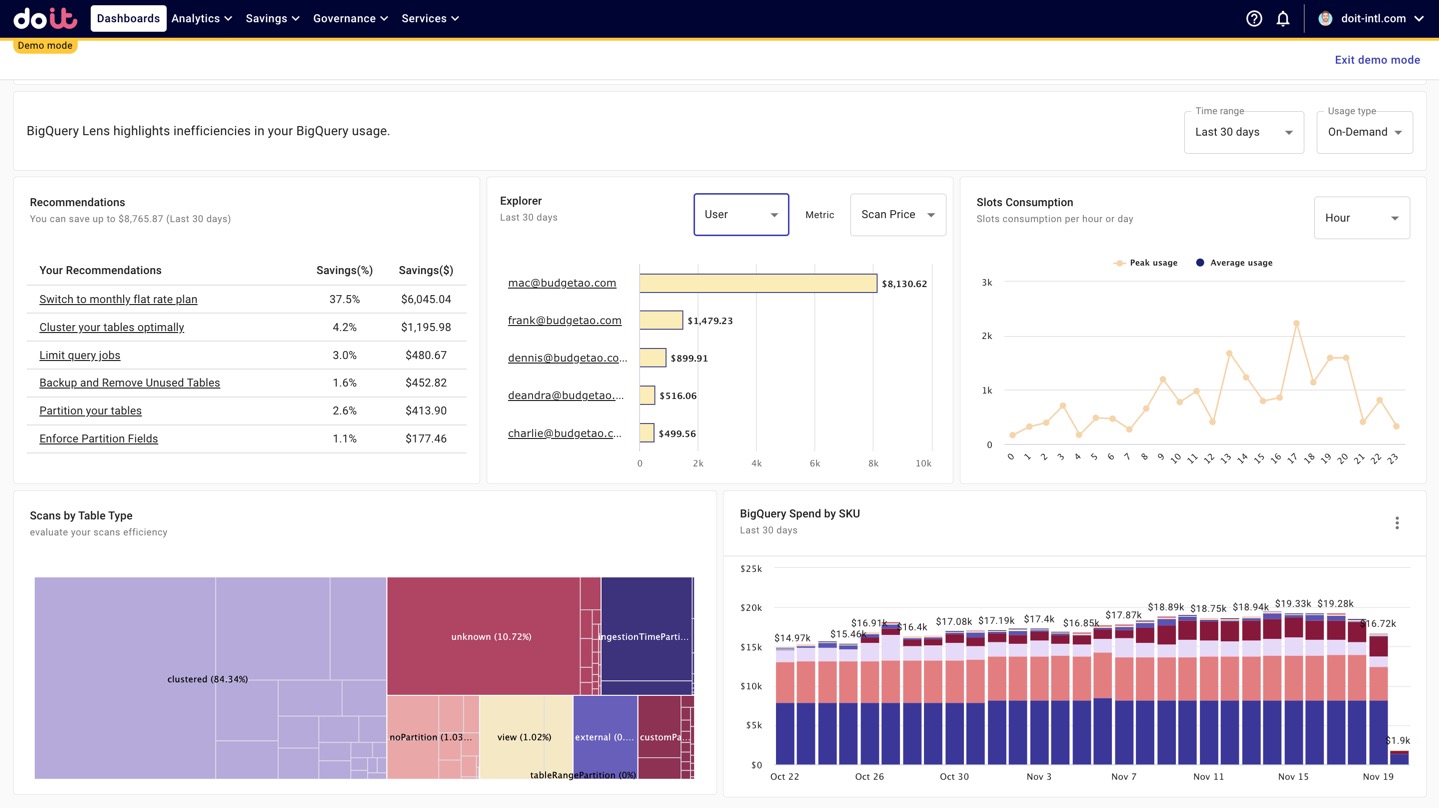

BigQuery Lens analyzes your BigQuery jobs and table metadata, producing actionable optimization recommendations along with a drill-down into your team’s usage statistics.

While you can spend time wading waist-deep through audit logs and queries trying to build dashboards through Cloud Monitoring or querying INFORMATION_SCHEMA.JOBS, these output only high-level metrics. You’ll still have to come to your own conclusions about what actions to take.

BigQuery Lens makes implementing best practices simple.

Specifically, its recommendations are geared towards helping you:

- Reducing amount of data scanned by clustering or partitioning tables

- Reducing costs of your scheduled jobs

- Leveraging flat-rate pricing when it makes sense

- Lowering storage costs by removing unused tables

- Enforcing partitioned fields in your queries

- Shifting recurring jobs around to reduce slot purchase requirements

Reduce bytes processed by clustering and partitioning tables

Whether you’re querying a project using on-demand or flat-rate pricing, you’ll benefit in terms of cost and performance by reducing the number of bytes your queries process. For projects that use on-demand pricing, it will directly reduce analysis costs. While flat-rate pricing is fixed based on the number of slots you purchase, reducing bytes processed can indirectly help you lower costs because it reduces your average slots usage. This subsequently reduces the amount of slots you’ll need to purchase (more on that below).

And a good place to start is by clustering and/or partitioning your tables.

Clustering tables (and how to maximize its benefits)

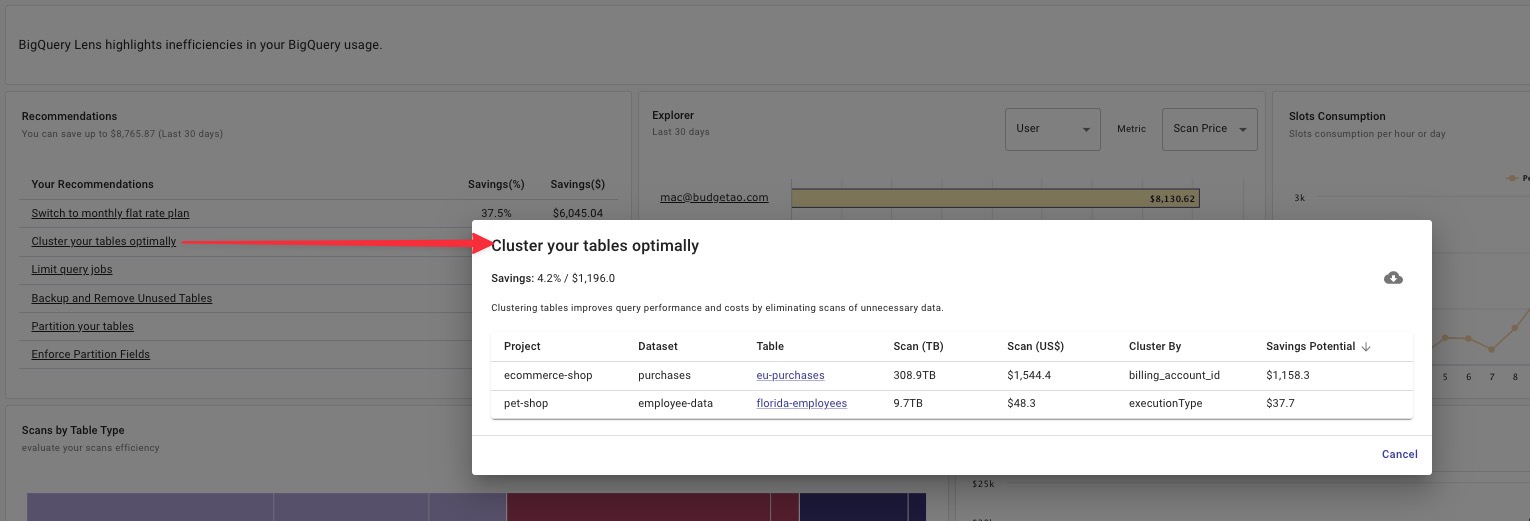

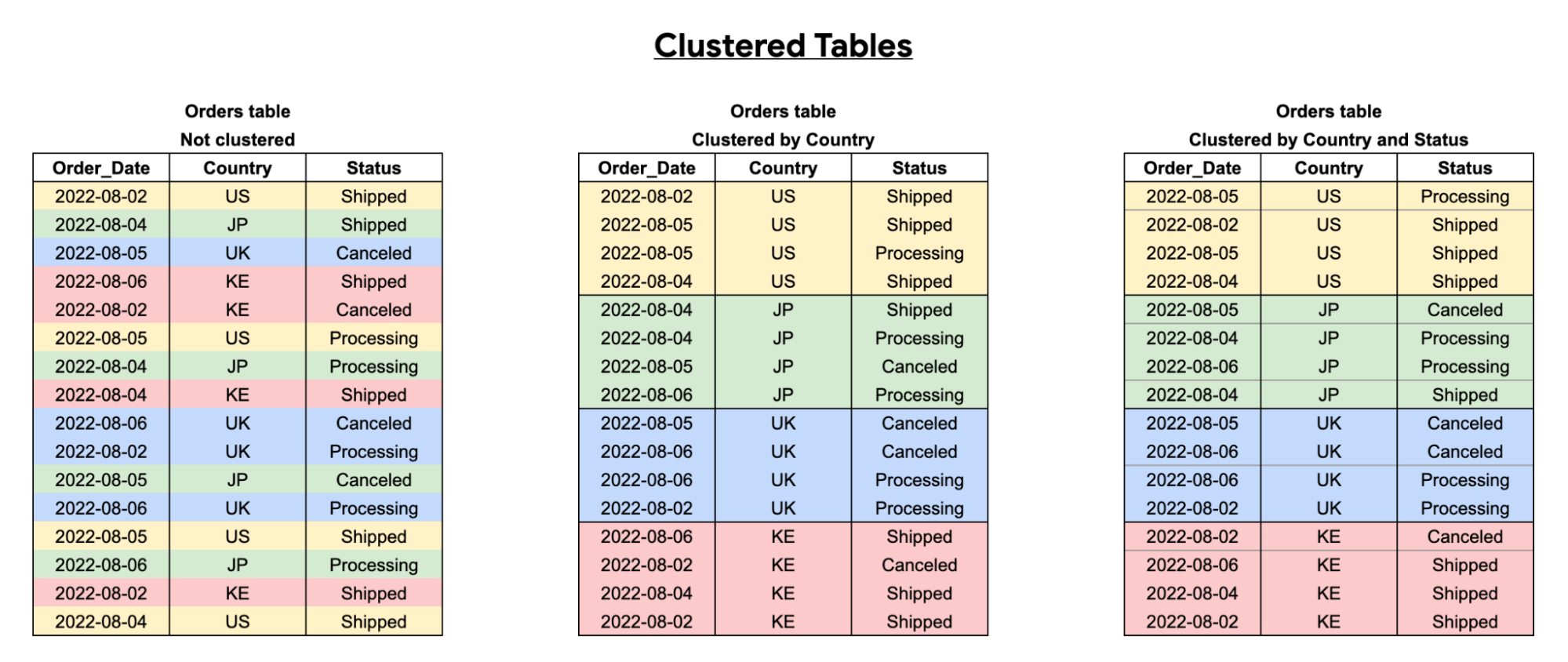

Clustering improves your query performance by arranging your table into blocks of data, based on the column(s) you cluster by. This makes it easier for BigQuery to scan only the relevant blocks of data — provided you structure your queries correctly.

However, the benefit you’ll get from clustering depends on which column(s) you cluster by — and in what order — plus how you structure your queries on clustered tables.

You should cluster tables by frequently-queried columns, especially when these columns contain many distinct values. In this scenario, BigQuery queries your tables more effectively by utilizing the order of the columns set by your clustering strategy on your tables.

As you can see in the diagram below, it’s also important to structure your queries based on the order of the clustering fields you select (i.e. filtering on your fields in clustered order). This will enable you to make maximum use of the clustering strategy you have set on your tables in your BigQuery jobs.

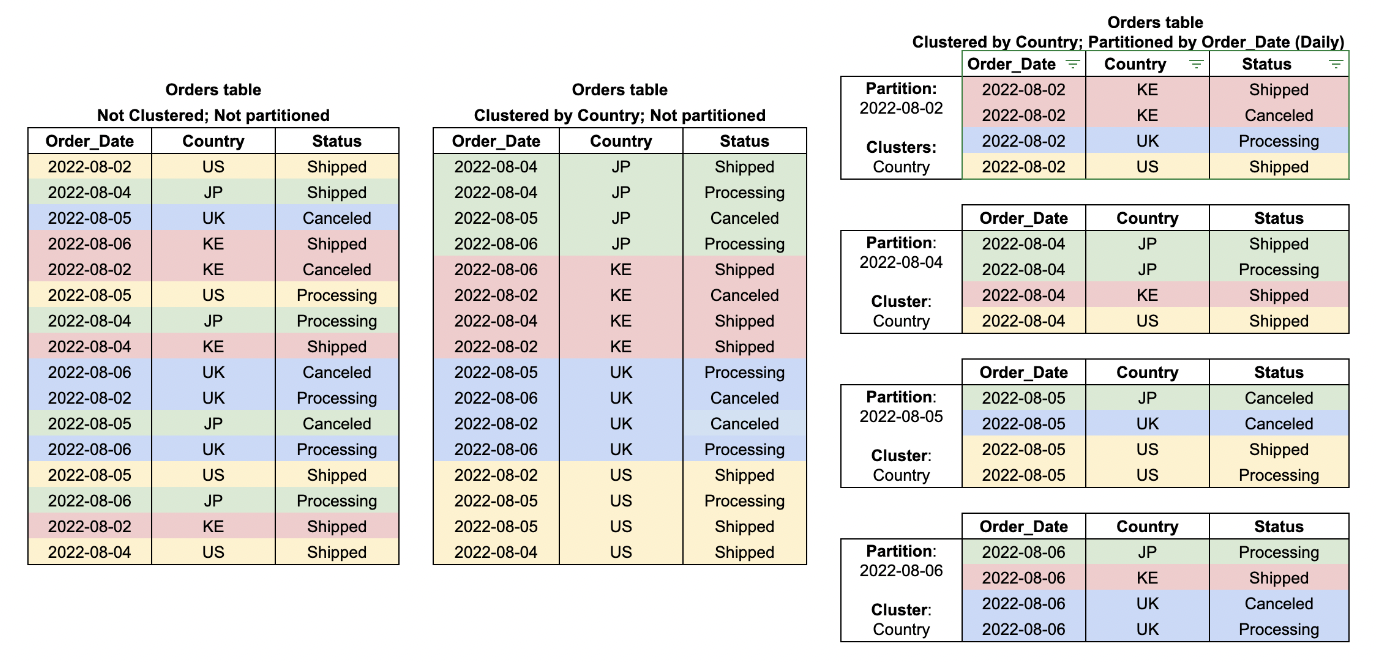

BigQuery Lens identifies tables you should cluster, highlighting the column(s) you should cluster by and in what order. All you have to do is click on the table name in the recommendation window to open it in your Google Cloud Console, and cluster the table.

It may also be worth benchmarking your previous queries on the table in question versus any jobs you have running on the table post-clustering. It’s extremely likely that you’ll see improvements in both the cost and execution time of your jobs running on that table.

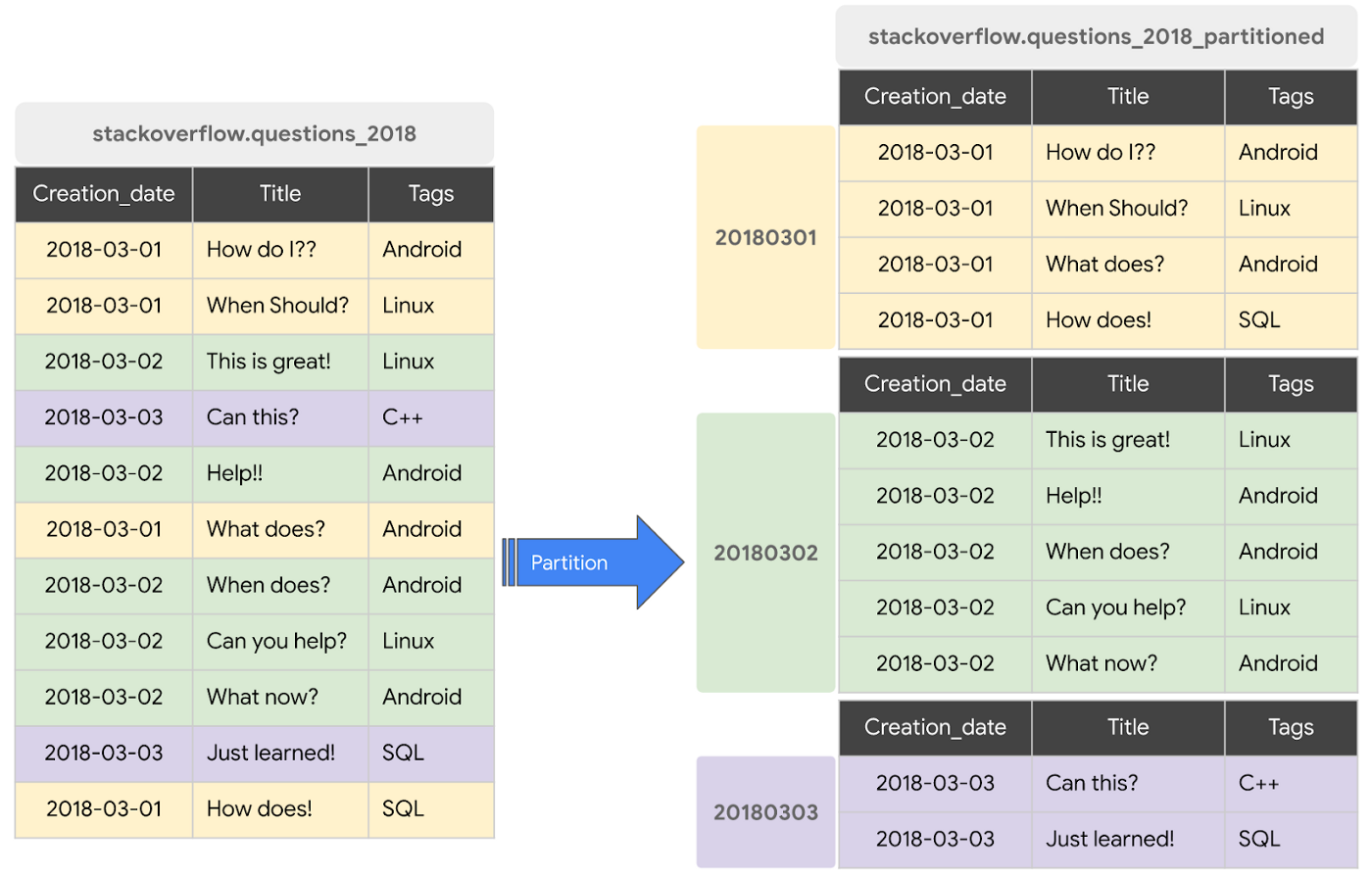

Partitioning tables (and why you should)

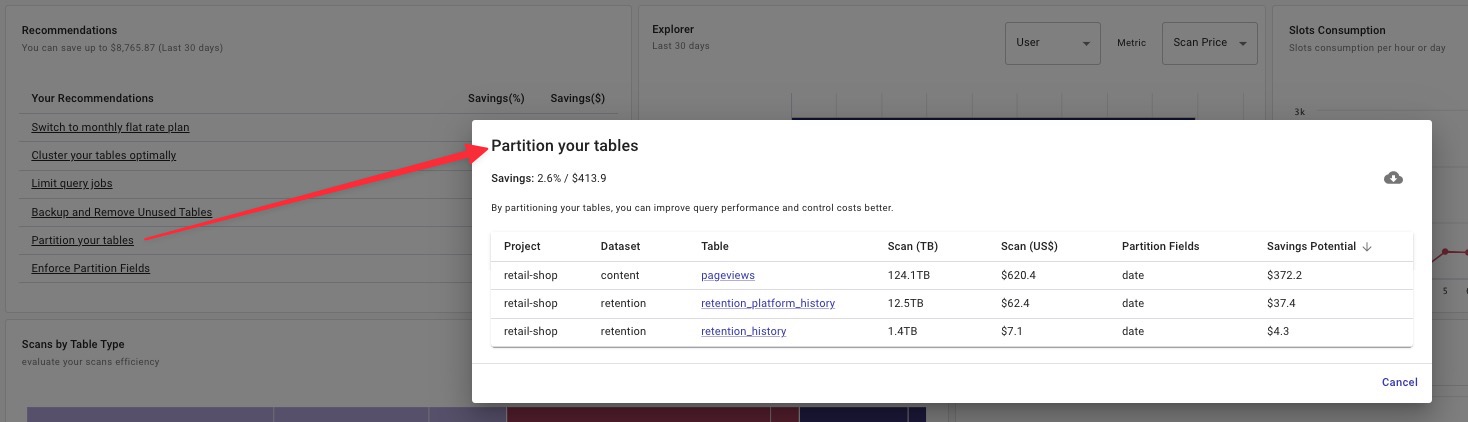

Partitioning tables helps control costs by dividing a large table into smaller chunks. Specifically, when you use partition pruning — filtering for specific partitions in your queries so you don’t scan the entire table – partitioning is effective.

It also gives you the ability to manage your data at a more granular level. You can set particular table partitions to expire or manage new input data more efficiently via the use of partitions in your BigQuery tables.

Similar to querying clustered tables, to realize the benefit of partitioned tables your query must use a qualifying filter on the value of the partitioning column.

Note: BigQuery Lens also identifies queries on partitioned tables that don't use a qualifying filter, but can (see “Enforce partition fields in your queries” below)

And just like with the clustering recommendation, BigQuery Lens also highlights which tables to partition, and on what field. While useful in general, if you have BI-powered dashboards running on BigQuery, implementing table partitions can make your dashboards faster and queries cheaper.

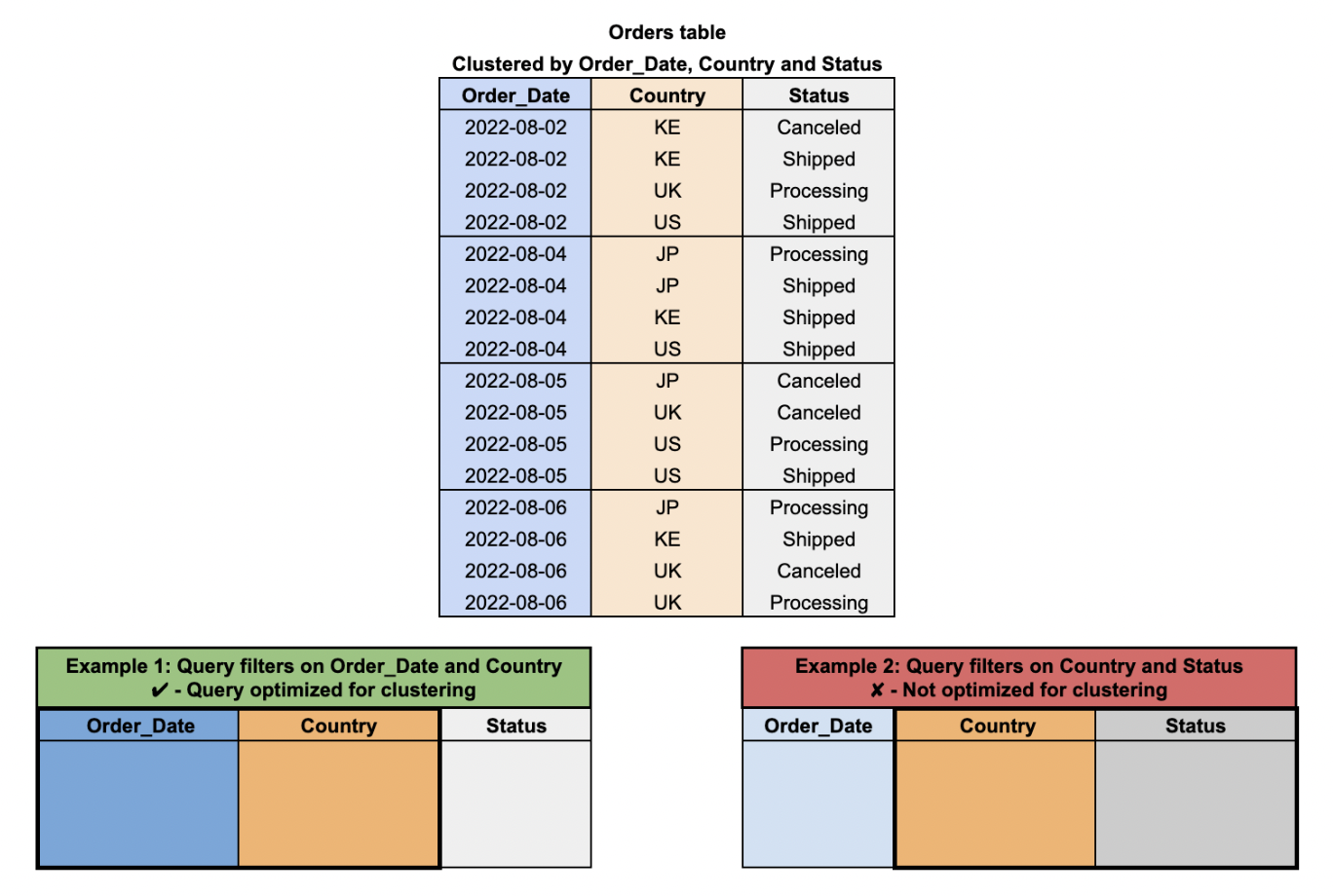

When to cluster AND partition a table

In some circumstances, it can be beneficial to use a strategy which combines both partitioning and clustering on your BigQuery tables.

This strategy is most useful when you want finely-grained sorting within your tables (see below diagram), while also giving you more transparency before running your queries on their likely costs due to the partition pruning capabilities of BigQuery.

However, bear in mind that combining the two strategies will lead to more metadata being maintained for your tables, while the benefits of clustering are also less likely to be realized if your tables are not often queried by common fields each time. This would negate the benefits of this particular strategy in BigQuery table structuring.

Switch to flat-rate pricing

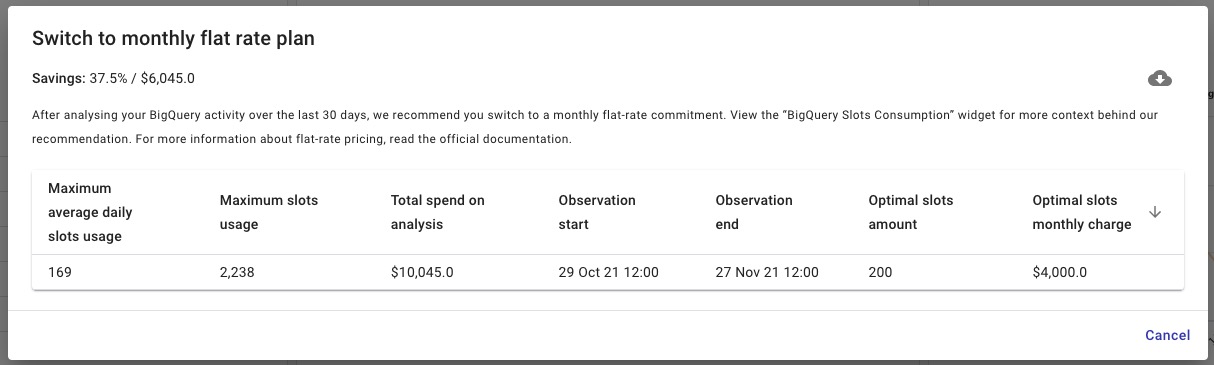

As your BigQuery use and data grows, you may reach a point where you’d save money switching from an on-demand pricing model to flat-rate.

When BigQuery Lens detects that you can reduce costs from doing so, it will display a slot commitment recommendation. In the example below, BigQuery Lens shows that our maximum average daily slots usage is 169 slots, and therefore recommends reserving 200 slots since you must purchase in 100-slot increments. We also see a much higher peak slots usage of 2,238 slots.

Note: BigQuery reservations and the on-demand pricing model are not mutually exclusive. You can switch between them using BigQuery Reservations, and even incorporate Flex Slots.

Since slots are a minimum one-month commitment, if you are uncertain about future query activity, extreme bursts or seasonality around your usage of BigQuery, you should be extra careful when purchasing them. This is where Flex Slots come in handy. Flex Slots are a way for you to purchase slots for short durations – as little as 60 seconds at a time.

For example, if you run all of your load/report jobs at 3 a.m., you could scale up and down easily by scheduling the provisioning and deprovisioning of Flex Slots with Cloud Composer. To avoid a situation where demand exceeds slot availability, one customer of ours even uses Cloud Functions to check every five minutes whether their Slot usage is >90% of their commitment, automatically provisioning Flex Slots so they don’t

Lower the frequency of your scheduled and other recurring jobs

You might have scheduled jobs running that don’t need to be executed as frequently as they are – or shouldn’t be executed at all. BigQuery Lens surfaces frequently-executed jobs and displays the potential savings from reducing the query’s execution frequency by 10-50%.

This recommendation helps inform better cost management conversations between you and your team and ensures that you’re only running particularly expensive jobs when needed.

For example, one customer noticed several jobs that didn’t need to be executed as frequently as they were. They brought this up with the wider team in a bid to reduce the frequency and calculated that they’d be able to save 10-15% on query costs with a slight reduction in number of executions.

Another customer noticed scheduled queries that were obsolete. Prior to using BigQuery Lens, they didn’t have visibility into this. The month after they canceled the unnecessary scheduled queries, they saw BigQuery - Analysis spend drop by over 50%.

Finally, if these recurring queries are generated from a BI-powered (i.e. Looker) dashboard, you have an opportunity to reduce costs by running the queries through BI Engine. BI Engine is perfect for dashboard queries because it intelligently caches BigQuery data in memory, resulting in faster queries. Since BI Engine caches data in-memory, the query stage that reads table data is free. Instead, you only pay for reserved memory capacity. However, if your project uses flat-rate pricing, subsequent stages use slots from your BigQuery reservation.

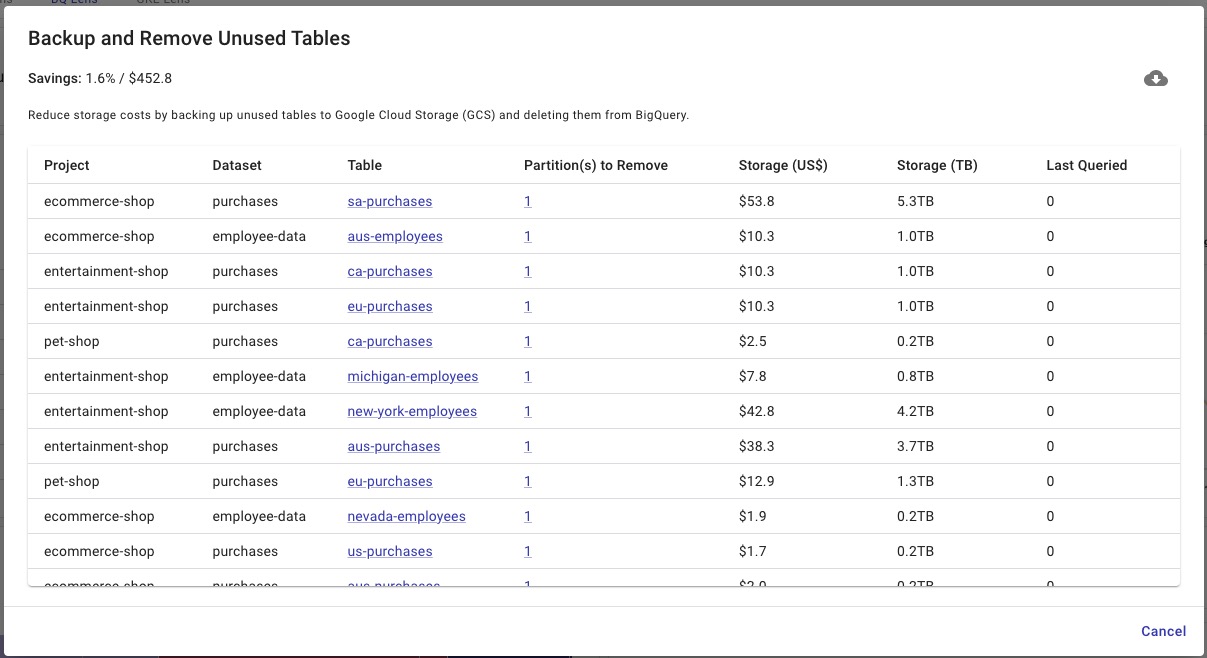

Save on storage costs by backing up and removing unused tables

Queries aren’t the only thing you can save money on – you’re also paying for storage in BigQuery! Unused tables represent what we’ve found to be an underutilized opportunity to lower BigQuery spend.

BigQuery Lens highlights tables (and partitions if they exist) that have gone unused for the last 30 days. You can reduce storage costs by backing up these tables to Cloud Storage and then removing them from BigQuery (or tweaking the table expiration settings).

Recently, a customer used this recommendation to surface various tables containing >3-years-old data that weren’t being queried. After backing up and removing these tables, they saw BigQuery costs drop by ~30%

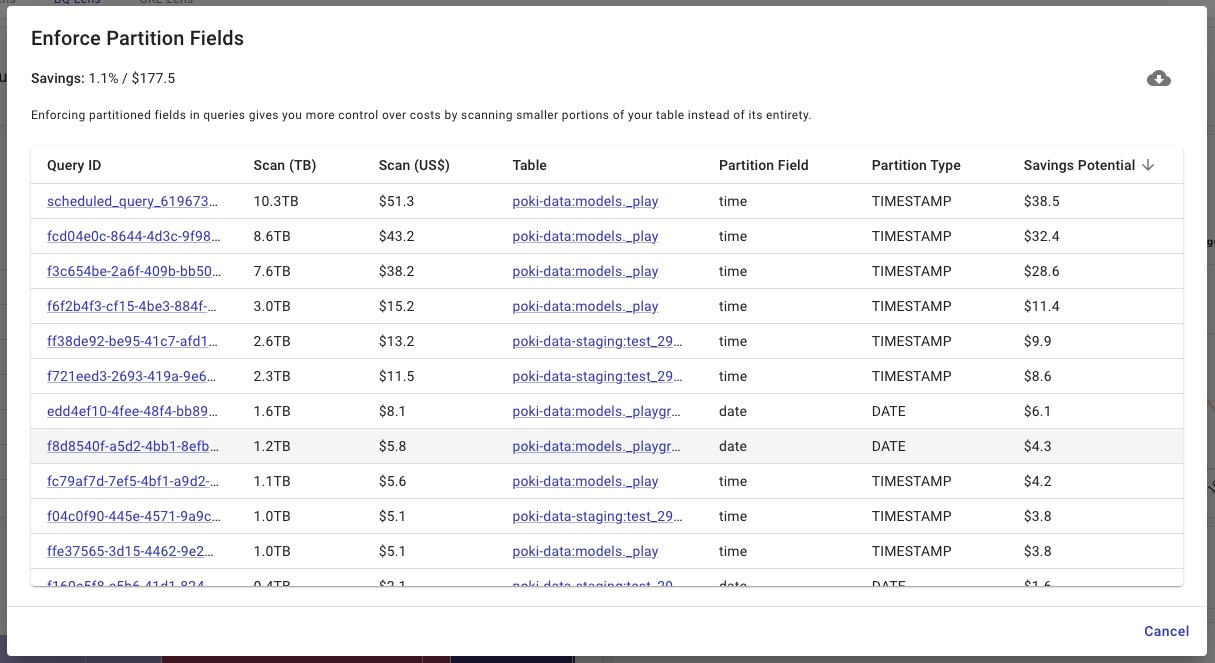

Enforce partition fields in your queries

Partitioning your tables is just half the battle. After partitioning, you need to make sure these tables are being queried effectively, using the field the table is partitioned on. This gives you more control over costs by scanning smaller portions of your table instead of the whole thing.

However, when managing a team of data analysts, it’s difficult to know whether everyone is actually including the partitioned field in their queries. BigQuery Lens identifies jobs querying partitioned tables where the partitioned field isn’t being utilized.

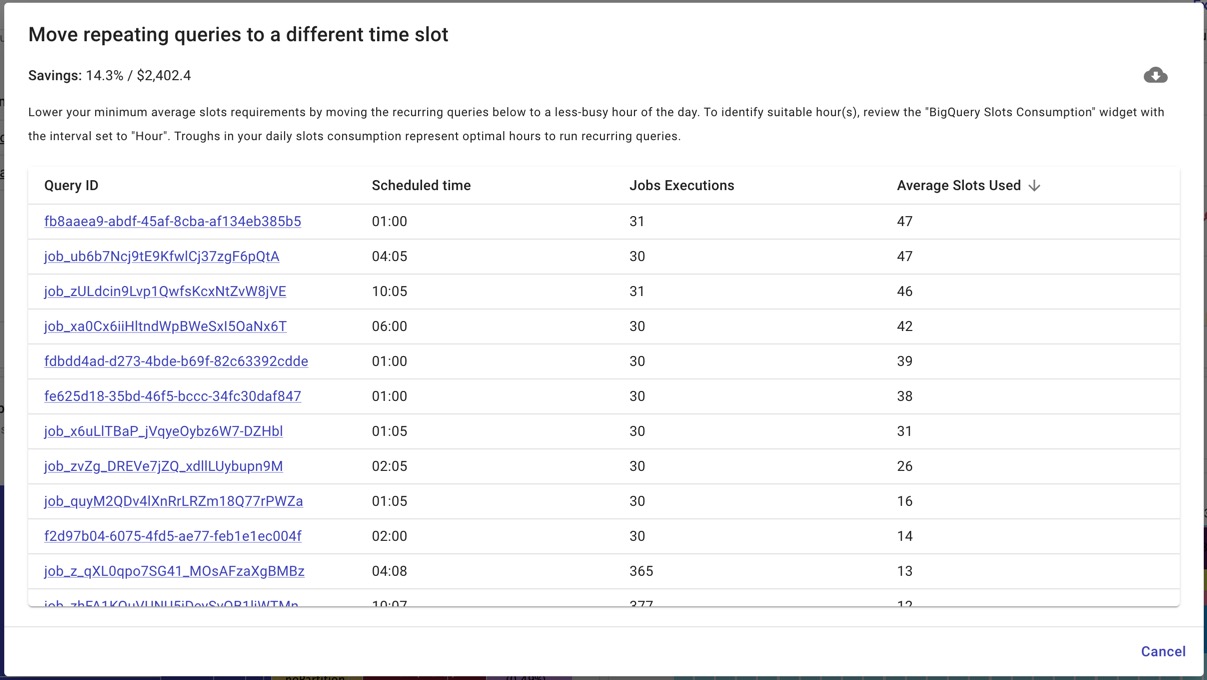

Lower flat-rate costs by moving recurring jobs around

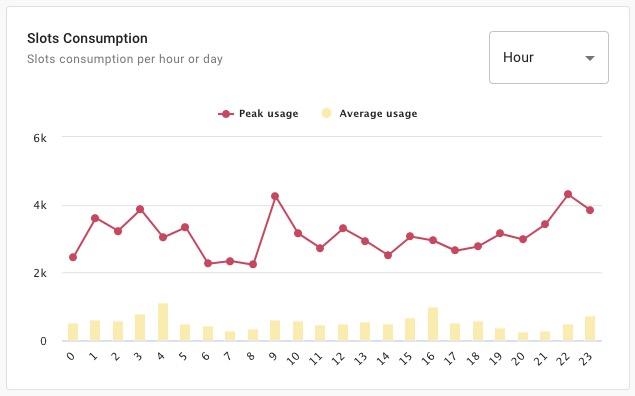

Think of optimizing your flat-rate costs as like playing a game of Tetris. Your goal is to stabilize your usage and have flat “blocks” of hourly slot consumption.

But typically you’ll have peaks and troughs in your average hourly slots usage (see chart below). And you might be basing your slots purchasing decisions on this inconsistent usage, spending more than you should.

To reduce your flat-rate costs, find recurring jobs that occur in these peak hours, and move them (if possible) to the hours when average hourly usage is low, or provision Flex Slots to handle the excess load.

Doing this flattens any peaks so that you have a lower, consistent slots usage pattern. As a result, you’ll need to purchase fewer slots and consequently lower your flat-rate costs long-term.

BigQuery Lens displays queries executed >30 times per month on projects using flat-rate pricing, along with the hour they’re executed in and the number of slots they consume.

You can then search for queries in peak hours and determine whether you can move them to hours when there are dips in usage. Using the chart above as an example, you should look for queries executed at 4 a.m. or 4 p.m. and move them to 7 a.m. or 8 p.m. if possible.

How to get BigQuery Lens

As with anything in the public cloud, if you’re not careful with how you use BigQuery, costs can rise rapidly. And with BigQuery representing the core of many companies’ data strategies, It’s not surprising to us that it’s consistently one of our most popular topics in terms of customer ticket requests.

As such, it’s important that you’re constantly optimizing for cost and performance. And it’s just as crucial that you understand how BigQuery is being used by your team, because you can’t optimize what you don’t know. BigQuery Lens helps you do just that.

If you’re not a DoiT customer and would like access to BigQuery Lens and our BigQuery experts, then get in touch with us.

If you’re already a DoiT customer, you should enable BigQuery Lens if you haven’t already.