The internet is vast and constantly growing. As of mid-2025, it hosts more than 1.25 billion websites and generates around 149 zettabytes of data annually. Over half of all traffic now comes from bots — many of them malicious. Against that backdrop, helping organizations protect their digital footprint has never been more crucial.

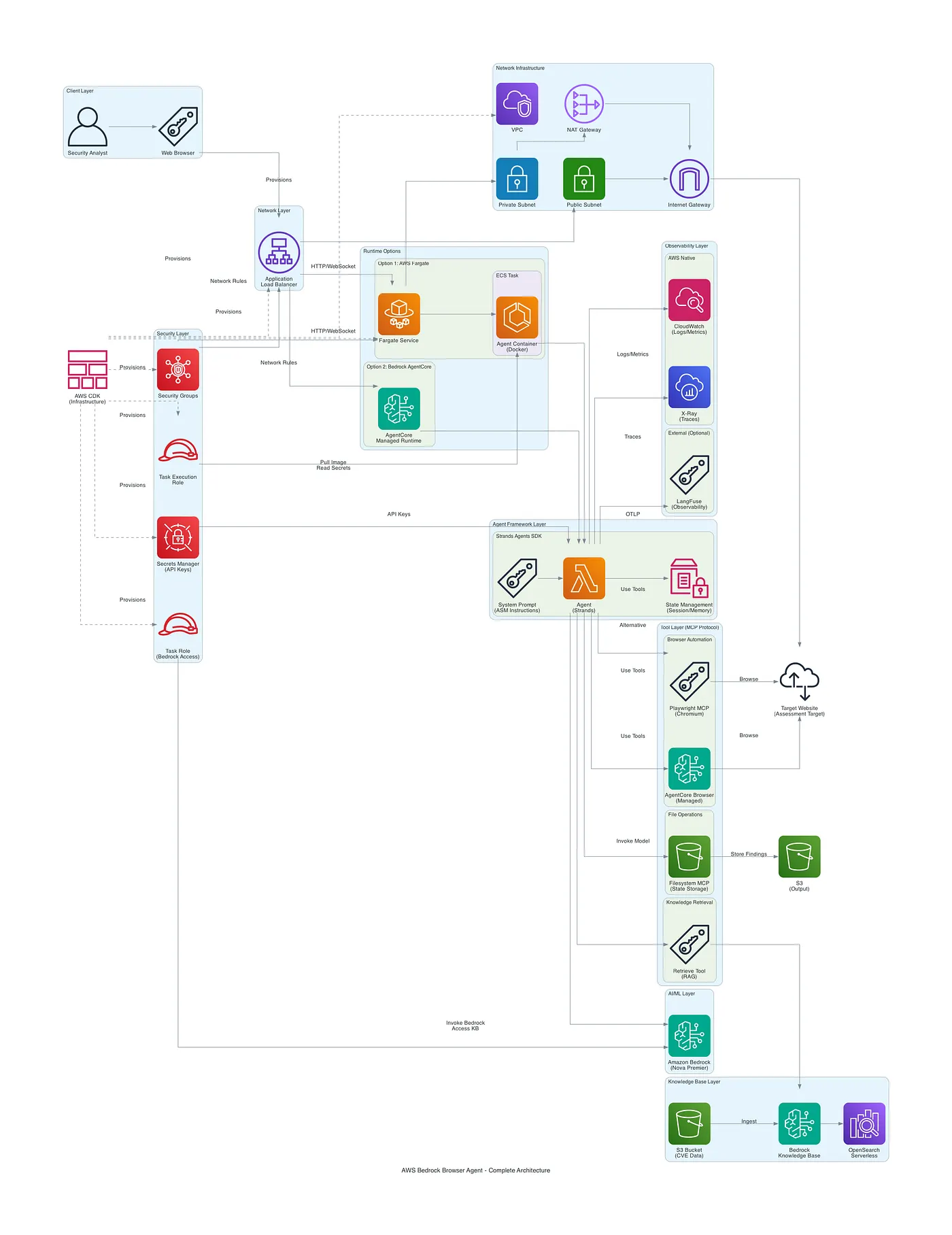

At DoiT, we worked with a customer specializing in Attack Surface Management (ASM) to explore how modern AI could automate and scale parts of this process. The goal: an agent that can browse the web, analyze a client’s exposed assets, and identify potential vulnerabilities — all running on AWS.

Designing the solution

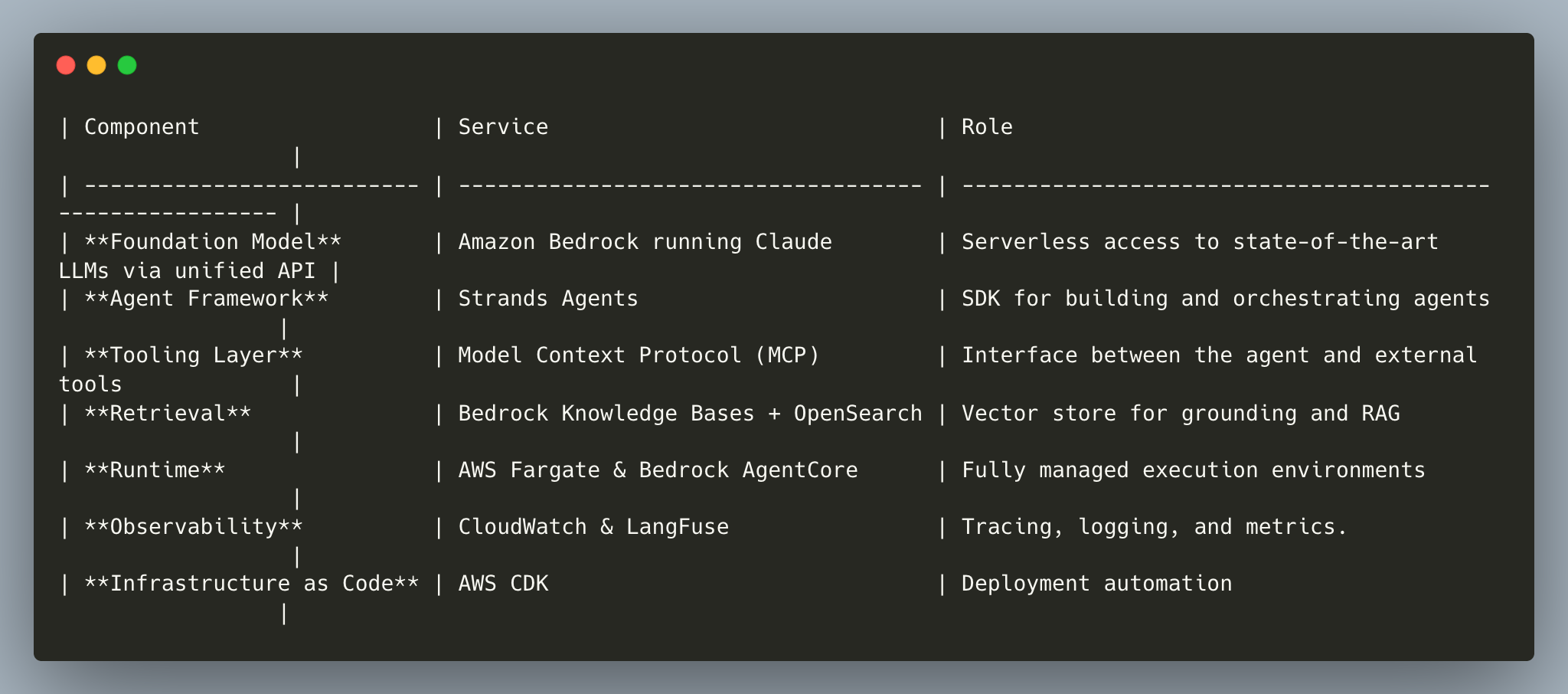

We architected a system on top of Amazon Bedrock and Strands Agents, combining reasoning models, browser automation, retrieval-augmented generation, and full production observability.

The core AWS components included:

And how they fit together:

Let’s dive a bit deeper into each of these in the next sections!

Reasoning Models and Agent Framework

Let’s start from the foundation: how the agent “thinks.” Amazon Bedrock provides easy access to advanced reasoning models such as Amazon’s Nova family, Anthropic’s Claude models, and models from Mistral AI, DeepSeek, and Meta’s Llama family. Many of these models support chain-of-thought-style reasoning, allowing the agent to produce intermediate “thinking” steps before arriving at conclusions and taking actions. That reasoning capability is essential here because each browsing action and observation builds on the last.

For orchestration, Strands Agents provides an elegant abstraction over the agent loop: at its core, a cycle of reasoning, tool use, and response generation. It integrates seamlessly with Bedrock models and offers production-grade primitives for session state, multi-agent coordination, and context management.
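To make that cycle concrete, here is a library-free sketch of the reason, act, observe loop that Strands manages for you. It is not the Strands API: fake_model and fetch_headers are hypothetical stand-ins for a Bedrock model and a real tool, so the control flow can run without AWS credentials.

```python
import json
from typing import Callable


def fake_model(messages: list[dict]) -> dict:
    """Hypothetical stand-in for a reasoning model: request one tool call, then answer."""
    tool_results = [m for m in messages if m["role"] == "tool"]
    if not tool_results:
        return {"type": "tool_call", "name": "fetch_headers",
                "arguments": {"url": "https://example.com"}}
    return {"type": "final", "text": f"Observed: {tool_results[-1]['content']}"}


def fetch_headers(url: str) -> str:
    """Hypothetical tool; a real one would make an HTTP request."""
    return json.dumps({"url": url, "server": "nginx"})


TOOLS: dict[str, Callable[..., str]] = {"fetch_headers": fetch_headers}


def run_agent(prompt: str, model=fake_model, tools=TOOLS,
              max_steps: int = 5) -> tuple[str, list[dict]]:
    """Reason -> act -> observe until the model returns a final answer."""
    messages = [{"role": "user", "content": prompt}]
    for _ in range(max_steps):
        decision = model(messages)                       # reasoning step
        if decision["type"] == "final":                  # response generation
            messages.append({"role": "assistant", "content": decision["text"]})
            return decision["text"], messages
        result = tools[decision["name"]](**decision["arguments"])  # tool use
        messages.append({"role": "tool", "name": decision["name"], "content": result})
    raise RuntimeError("agent did not finish within max_steps")


if __name__ == "__main__":
    answer, history = run_agent("Which web server does example.com run?")
    print(answer)
```

The growing message history is what lets each step build on the previous observation, and the step limit keeps a confused model from looping forever; in Strands, this bookkeeping lives inside the agent abstraction.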

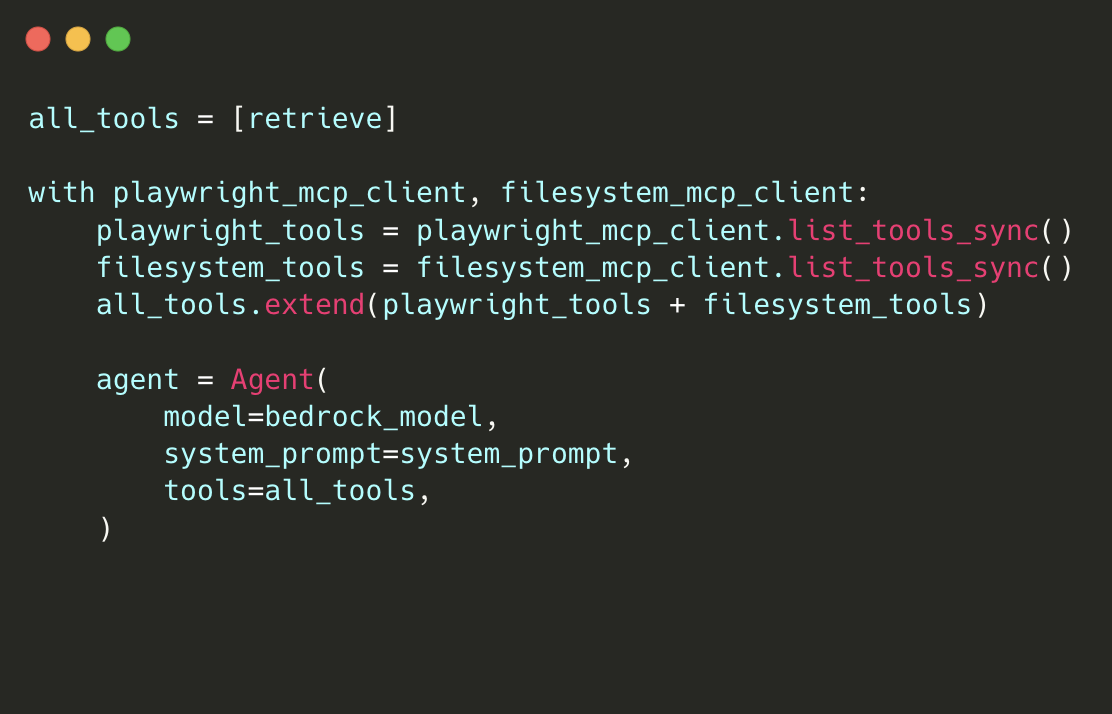

A small code sample from the agent to give you a sense of how simple Strands makes developing an agent:

This loop lets the agent autonomously browse, reason over its findings, and persist state between steps.

Acting Through Tools and MCP

But reasoning alone isn’t enough — the agent must interact with the world.

Tooling was enabled through the Model Context Protocol (MCP), an open standard that connects LLMs to external systems. Each MCP server exposes a catalog of “tools” with clear definitions and schemas, which the agent can call dynamically at runtime.

For our use case, we combined three tool sources:

- retrieve: for semantic querying of the vulnerability database.
- Playwright MCP: for web browsing and website interactions.
- Filesystem MCP: for simple persistent storage and logging.
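MCP messages are JSON-RPC 2.0: servers advertise tools through tools/list and execute them through tools/call. The toy dispatcher below mimics those message shapes in-process to show how a published schema lets the caller validate arguments before a tool runs. It is not the MCP SDK, and the tool names, parameters, and stub implementations are hypothetical.

```python
import json

# Hypothetical catalog, shaped like tools/list entries: name, description, JSON Schema.
CATALOG = [
    {"name": "retrieve",
     "description": "Semantic search over the vulnerability knowledge base.",
     "inputSchema": {"type": "object",
                     "properties": {"text": {"type": "string"}},
                     "required": ["text"]}},
    {"name": "browser_navigate",
     "description": "Open a URL in the browser.",
     "inputSchema": {"type": "object",
                     "properties": {"url": {"type": "string"}},
                     "required": ["url"]}},
]

# Stub implementations standing in for the real servers.
IMPLS = {
    "retrieve": lambda text: f"top matches for {text!r}",
    "browser_navigate": lambda url: f"navigated to {url}",
}


def _error(req_id, code: int, message: str) -> dict:
    return {"jsonrpc": "2.0", "id": req_id, "error": {"code": code, "message": message}}


def handle(request: dict) -> dict:
    """Answer a JSON-RPC request for tools/list or tools/call."""
    method, req_id = request["method"], request["id"]
    if method == "tools/list":
        return {"jsonrpc": "2.0", "id": req_id, "result": {"tools": CATALOG}}
    if method == "tools/call":
        name = request["params"]["name"]
        args = request["params"].get("arguments", {})
        spec = next((t for t in CATALOG if t["name"] == name), None)
        if spec is None:
            return _error(req_id, -32602, f"unknown tool: {name}")
        missing = [k for k in spec["inputSchema"]["required"] if k not in args]
        if missing:
            return _error(req_id, -32602, f"missing arguments: {missing}")
        text = IMPLS[name](**args)
        return {"jsonrpc": "2.0", "id": req_id,
                "result": {"content": [{"type": "text", "text": text}], "isError": False}}
    return _error(req_id, -32601, "method not found")


if __name__ == "__main__":
    call = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
            "params": {"name": "browser_navigate", "arguments": {"url": "https://example.com"}}}
    print(json.dumps(handle(call), indent=2))
```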

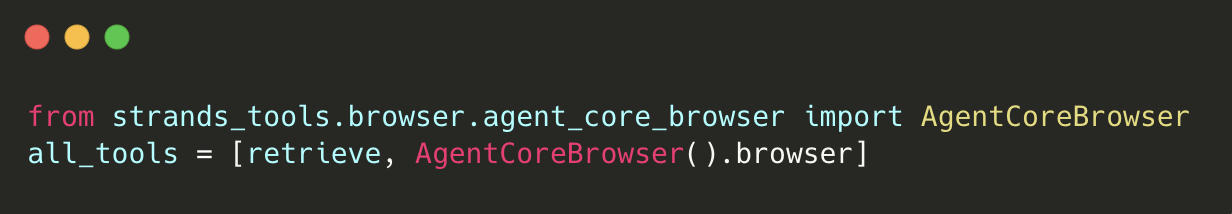

While we were developing this, in August 2025, AWS introduced AgentCore, which includes its own Browser Tool and removes the need to manage our own Playwright infrastructure. The Browser Tool provides a fully managed, isolated browser environment with IAM integration and CloudTrail observability, and it dropped neatly into the existing code:

The modularity of Strands made it trivial to switch from self-hosted browser tooling to a more secure and scalable managed service.
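The switch was easy because the agent depends on what a browser tool does, not on who hosts it. A minimal sketch of that design, assuming hypothetical SelfHostedBrowser and ManagedBrowser wrappers (neither is a real Strands or AgentCore class) behind one interface:

```python
from typing import Callable, Protocol


class Browser(Protocol):
    """The only capability the agent relies on."""
    def open(self, url: str) -> str: ...


class SelfHostedBrowser:
    """Hypothetical wrapper around a Playwright server we run ourselves."""
    def open(self, url: str) -> str:
        return f"[self-hosted] {url}"


class ManagedBrowser:
    """Hypothetical wrapper around a managed browser session."""
    def __init__(self, region: str) -> None:
        self.region = region

    def open(self, url: str) -> str:
        return f"[managed:{self.region}] {url}"


def make_browse_tool(browser: Browser) -> Callable[[str], str]:
    """Build the tool the agent sees; its name and signature never change."""
    def browse(url: str) -> str:
        return browser.open(url)
    return browse


if __name__ == "__main__":
    for backend in (SelfHostedBrowser(), ManagedBrowser("us-east-1")):
        print(make_browse_tool(backend)("https://example.com"))
```

Because the agent only ever sees browse(url), swapping backends is a one-line change at construction time, and prompts or tool descriptions do not need to be touched.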

Grounding with Bedrock Knowledge Bases

To help the agent reason with real-world data, we grounded it in the CVE™ Program database, a repository of known vulnerabilities.

Using Amazon Bedrock Knowledge Bases, we uploaded the CVE dataset, which AWS automatically chunked, embedded, and indexed into OpenSearch Serverless, ready to be queried.
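Fixed-size chunking with overlap is one of the strategies Bedrock Knowledge Bases offers. The sketch below approximates it on whitespace-separated words rather than model tokens, so its boundaries will not match what the managed service produces; max_words and overlap_pct are illustrative parameters, not service defaults.

```python
def chunk_words(text: str, max_words: int = 50, overlap_pct: int = 20) -> list[str]:
    """Split text into fixed-size word windows that overlap by overlap_pct percent."""
    if max_words <= 0 or not 0 <= overlap_pct < 100:
        raise ValueError("need max_words > 0 and 0 <= overlap_pct < 100")
    words = text.split()
    overlap = max_words * overlap_pct // 100
    step = max_words - overlap          # always >= 1 because overlap_pct < 100
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break                       # last window already reaches the end
    return chunks


if __name__ == "__main__":
    sample = " ".join(f"w{i}" for i in range(10))
    for chunk in chunk_words(sample, max_words=4, overlap_pct=50):
        print(chunk)
```

The overlap means a vulnerability description that straddles a boundary still appears intact in at least one chunk, at the cost of indexing some text twice.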

The retrieve tool then let the agent query this vector store at runtime, giving it current knowledge (as of the last data-source sync) of the vulnerabilities relevant to each client asset it encountered. Bedrock Knowledge Bases’ managed ingestion and retrieval pipeline saved significant engineering effort compared to building a custom RAG flow from scratch.
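Conceptually, the retrieve step embeds the query and returns the nearest chunks. A toy version, assuming word-count vectors and cosine similarity in place of a real embedding model and the OpenSearch Serverless index; the EXAMPLE entries are made up and are not CVE records.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (a Knowledge Base uses an embedding model)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[tuple[float, str]]:
    """Return the k chunks most similar to the query, highest score first."""
    q = embed(query)
    scored = [(cosine(q, embed(c)), c) for c in chunks]
    return sorted(scored, key=lambda s: s[0], reverse=True)[:k]


if __name__ == "__main__":
    # Made-up entries for illustration only.
    docs = [
        "EXAMPLE-1 sql injection in php login form",
        "EXAMPLE-2 reflected xss in search parameter",
        "EXAMPLE-3 buffer overflow in image parser",
    ]
    for score, chunk in retrieve("php login sql injection", docs, k=2):
        print(f"{score:.2f}  {chunk}")
```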

Deploying the Agent on AWS

After validating the prototype locally, we brought the solution to production using two managed runtimes:

1. AWS Fargate

A containerized deployment packaged with Docker and deployed with AWS CDK. This setup gave us full control over scaling and networking, which makes it a good fit when you need that control or have specialized dependencies (like the MCP servers).

2. Amazon Bedrock AgentCore

AgentCore offers an even higher-level abstraction: you define the agent and its configuration, and AWS runs it for you.

With a few code adjustments (mainly switching from filesystem storage to the Strands state system), the same agent ran fully managed with no CDK or VPC configuration required: we simply ran agentcore configure and agentcore launch from the AgentCore starter toolkit. For rapid iteration and minimal operational overhead, this approach was unbeatable.
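For context on what the managed runtime expects from the container: as we understand the AgentCore Runtime service contract, an agent serves POST /invocations and GET /ping on port 8080, and the AgentCore SDK normally provides this wrapper for you. Treat the sketch below as an assumption-laden illustration rather than the toolkit's code; run_agent is a hypothetical placeholder and the /ping response body is assumed.

```python
import http.client
import json
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from typing import Optional


def run_agent(prompt: str) -> str:
    """Hypothetical placeholder for the real agent call."""
    return f"scanned: {prompt}"


class AgentHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        if self.path == "/ping":
            self._send(200, {"status": "Healthy"})   # body shape is an assumption
        else:
            self._send(404, {"error": "not found"})

    def do_POST(self) -> None:
        if self.path != "/invocations":
            self._send(404, {"error": "not found"})
            return
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length) or b"{}")
        self._send(200, {"result": run_agent(payload.get("prompt", ""))})

    def _send(self, status: int, body: dict) -> None:
        data = json.dumps(body).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def log_message(self, format, *args) -> None:
        pass  # silence per-request logging


def start_background(host: str = "127.0.0.1", port: int = 0) -> ThreadingHTTPServer:
    """Serve on a daemon thread; port 0 lets the OS pick a free port."""
    server = ThreadingHTTPServer((host, port), AgentHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def call(host: str, port: int, method: str, path: str,
         payload: Optional[dict] = None) -> tuple[int, dict]:
    """Minimal client: send an optional JSON body, return (status, parsed JSON)."""
    conn = http.client.HTTPConnection(host, port, timeout=5)
    try:
        body = json.dumps(payload).encode() if payload is not None else None
        headers = {"Content-Type": "application/json"} if body is not None else {}
        conn.request(method, path, body=body, headers=headers)
        resp = conn.getresponse()
        return resp.status, json.loads(resp.read())
    finally:
        conn.close()


if __name__ == "__main__":
    server = start_background()
    port = server.server_address[1]
    print(call("127.0.0.1", port, "POST", "/invocations", {"prompt": "example.com"}))
    server.shutdown()
```

In a container you would bind "0.0.0.0" on port 8080 and keep the process alive; port 0 is only used so the demo picks a free port.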

Observability and Evaluation

Monitoring agent behavior is as critical as designing it.

For teams preferring external analytics, Langfuse plugged in effortlessly via OpenTelemetry and produced a fine-grained timeline of loops, model calls, and tool invocations. That timeline shows, step by step, what your agent is “thinking” and which tools it chooses to use, which is crucial for debugging and continuous improvement.

After the AgentCore launch, AgentCore Observability became available as well. It integrates with CloudWatch, which now has a GenAI Observability dashboard that captures traces, metrics, and logs across every invocation. Developers can visualize token usage and error rates and inspect individual sessions, turning the black box of LLM reasoning into measurable data.

Monitor Your Agent’s Spending with DoiT Cloud Intelligence

Closely related to observability, understanding cost impact before production deployment should also be top of mind.

At DoiT, we’ve launched the GenAI Lens, part of our DoiT Cloud Intelligence™ platform, to help you analyze the spending patterns of generative-AI workloads.

It integrates directly with Amazon Bedrock, as well as Anthropic and OpenAI, giving you visibility into which models and workloads drive your costs.

For deeper analysis, DataHub lets you embed data ingestion directly into your application. With labeling and custom dashboards, you can track costs per domain or customer — even calculate a cost per vulnerability found, turning security insights into measurable ROI.
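The cost-per-vulnerability figure is simple arithmetic once token usage is labeled by customer: each invocation costs input_tokens / 1000 × input price + output_tokens / 1000 × output price, and the metric is total spend divided by total findings. A sketch, assuming hypothetical per-1K-token prices and invocation records (this is not a DoiT or Bedrock API):

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical prices in USD per 1K tokens; substitute your model's actual rates.
PRICE_IN_PER_1K = 0.003
PRICE_OUT_PER_1K = 0.015


@dataclass
class Invocation:
    customer: str        # customer or domain label attached to the workload
    input_tokens: int
    output_tokens: int
    vulns_found: int


def invocation_cost(inv: Invocation) -> float:
    """Token cost of one agent invocation."""
    return (inv.input_tokens / 1000) * PRICE_IN_PER_1K + (inv.output_tokens / 1000) * PRICE_OUT_PER_1K


def cost_per_vuln(invocations: list[Invocation]) -> dict[str, Optional[float]]:
    """Total spend divided by total findings, per customer (None if nothing was found)."""
    spend: dict[str, float] = {}
    found: dict[str, int] = {}
    for inv in invocations:
        spend[inv.customer] = spend.get(inv.customer, 0.0) + invocation_cost(inv)
        found[inv.customer] = found.get(inv.customer, 0) + inv.vulns_found
    return {c: (spend[c] / found[c] if found[c] else None) for c in spend}


if __name__ == "__main__":
    runs = [Invocation("acme", 100_000, 20_000, 2),
            Invocation("acme", 50_000, 10_000, 1),
            Invocation("globex", 80_000, 5_000, 0)]
    print(cost_per_vuln(runs))
```

Returning None rather than infinity for customers with no findings keeps dashboards from treating "nothing found yet" as an extreme cost.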

Looking Ahead

From data ingestion to reasoning and observability, the AWS ecosystem provided all the building blocks to bring this autonomous ASM agent to life — securely, scalably, and with minimal infrastructure management, especially with AgentCore now GA.

We ran tests against testphp.vulnweb.com, Acunetix’s deliberately vulnerable demo site, and our system detected SQL injection, reflected and stored XSS, authentication bypass, and even active site compromise scenarios. These findings validated that the agent can autonomously traverse web flows, submit input payloads, interpret execution evidence, and correlate results against the CVE database, all with minimal human oversight. Beyond technical accuracy, the tests demonstrated the value of coupling autonomous reasoning with real-time retrieval and observability, turning raw vulnerability scans into structured, explainable intelligence.
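One piece of “execution evidence” is whether input comes back unescaped. A minimal canary-reflection check, assuming a hypothetical send(payload) callable that submits a value to one parameter and returns the response body; it is a first-pass XSS signal, not our agent's detection logic, and the tests use local fake responders instead of a live site.

```python
import html
import secrets
from typing import Callable


def reflection_check(send: Callable[[str], str]) -> str:
    """Send a unique, harmless canary and classify how it comes back."""
    token = f"zq{secrets.token_hex(4)}"   # random marker unlikely to occur naturally
    probe = f'{token}"<x>'                 # adds characters that need HTML escaping
    body = send(probe)
    if probe in body:
        return "reflected-unescaped"      # markup survived: worth an XSS follow-up
    if html.escape(probe) in body:
        return "reflected-escaped"        # output encoding appears to be applied
    if token in body:
        return "reflected-filtered"       # marker came back, special characters altered
    return "not-reflected"


if __name__ == "__main__":
    vulnerable = lambda q: f"<p>Results for {q}</p>"
    print(reflection_check(vulnerable))
```

A random token per probe avoids false positives from text that already exists on the page; a reflected-unescaped result still needs a context-aware follow-up before it counts as a confirmed finding.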

There are more possibilities to explore: refining reporting capabilities, evaluating performance on known benchmarks, and integrating additional vulnerability databases. But even in its current form, this project demonstrates how Amazon Bedrock and Strands Agents can translate the promise of generative AI into operational value in cybersecurity.

All source code and implementation details are available on GitHub, and a long-form version of this post is available here.

—

Join us in making your FinOps journey easier: talk to us via doit.com/services!